There are many myths about music and how hearing aids should be fit. This is about three of those myths.

- As technology gets better so will music fidelity

- Wider is better

- More advanced features are better

Every hearing aid design engineer has to come to grips with the similarities and differences between speech and music. This has always been the case, but it matters even more with the advent of portable (and more accessible) music: both speech and music are desired stimuli for hard of hearing consumers, and most people need both to get the most out of life.

The design engineer’s boss, however, would rather that they spend their time and energy dealing with speech. Speech quality is what sells hearing aids; music fidelity just goes along for the ride. Speech quality is number one, and music quality is its poor cousin, really only addressed once the “number one” issue is taken care of.

Here are three myths about music and hearing aids:

1. As technology gets better so will music fidelity:

This sounds like a motherhood and apple pie statement. After all, hearing aids of the 1930s are not as good as those today. However, since the late 1980s, hearing aid microphones have been able to transduce 115 dB SPL with virtually no distortion. Since the 1990s, hearing aid receivers could be made broadband enough to provide significant output in the rarefied regions above the piano keyboard (>4000 Hz). And wide dynamic range compression (WDRC) hasn’t really changed in over 20 years (I am going to get some “comments” about this statement, but I am really only referring to the level-dependent characteristic of all modern non-linear hearing aids). So what needs to “get better”?

Well, not much, but this is not an “evolutionary” change in which “hearing aids will simply get better” over time. Something specific needs to be done, and it relates to the “front end” of the hearing aid. Specifically, the analog-to-digital converter needs to improve so that it can handle the more intense components of music with minimal distortion. Several manufacturers have successfully addressed this problem, but most have not. Unless this problem is resolved, aided music fidelity will not improve.
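To make the front-end problem concrete, here is a minimal sketch of what happens when an input exceeds the ceiling of the analog-to-digital stage. It is only an illustration under stated assumptions: the 95 dB SPL ceiling (`CEILING_DB_SPL`) is a hypothetical figure not taken from this article, real converters do not overload as a perfectly symmetric hard clip, and all function names are made up for the example.

```python
import math

# Assumed front-end ceiling; a hypothetical figure, not taken from the article.
CEILING_DB_SPL = 95.0


def sine(peak, cycles=8, n=1024):
    """One buffer holding a whole number of cycles of a sine with the given peak."""
    return [peak * math.sin(2 * math.pi * cycles * i / n) for i in range(n)]


def hard_clip(samples, ceiling=1.0):
    """Crude overload model: anything beyond the ceiling is flattened."""
    return [max(-ceiling, min(ceiling, s)) for s in samples]


def component(samples, cycles):
    """Amplitude of the component completing `cycles` periods in the buffer."""
    n = len(samples)
    re = sum(s * math.cos(2 * math.pi * cycles * i / n) for i, s in enumerate(samples))
    im = sum(s * math.sin(2 * math.pi * cycles * i / n) for i, s in enumerate(samples))
    return 2 * math.hypot(re, im) / n


def thd_percent(samples, cycles=8, harmonics=range(2, 11)):
    """Total harmonic distortion (harmonics 2 to 10) relative to the fundamental."""
    fundamental = component(samples, cycles)
    distortion = math.sqrt(sum(component(samples, k * cycles) ** 2 for k in harmonics))
    return 100 * distortion / fundamental


def front_end_thd(peak_db_spl):
    """THD after the assumed front end for a tone with the given peak level."""
    peak = 10 ** ((peak_db_spl - CEILING_DB_SPL) / 20)  # 1.0 means "at the ceiling"
    return thd_percent(hard_clip(sine(peak)))


if __name__ == "__main__":
    for level in (80, 98, 105):
        print(f"{level} dB SPL peak -> THD {front_end_thd(level):.1f}%")
```

Under these assumptions, a tone that stays below the ceiling passes through essentially undistorted, while one that exceeds it is distorted before any later processing sees it. No amount of software adjustment after the converter can undo that, which is why the fix has to happen at the front end.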

2. Wider is better:

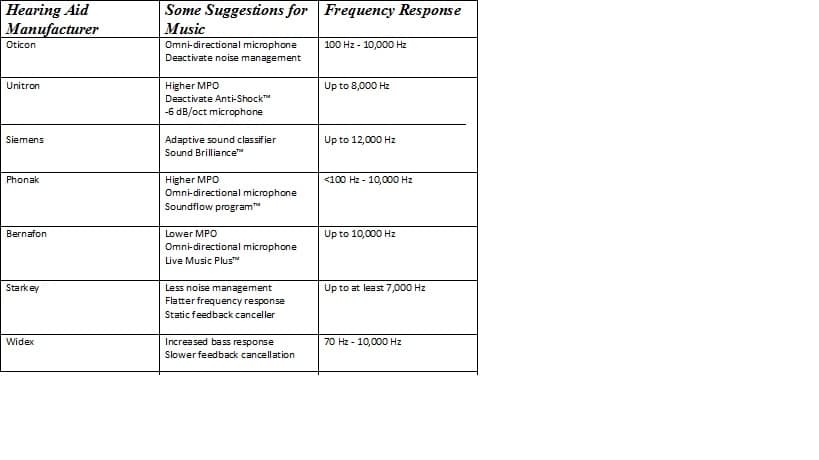

The claim is that the broader the bandwidth for music, the better it will be. Table 1 shows a sampling of some manufacturers’ suggested bandwidths for music (as well as some other suggestions for the music program). These are not based on comprehensive science, and many represent mere “opinions” of the respective marketing departments.

If the hearing loss is mild AND if the configuration of the hearing loss is relatively flat, then a broad bandwidth does make sense, and this is indeed supported by the literature. If, however, the loss is a sensorineural one greater than about 60 dB HL, then because of the possibility of dead regions in the cochlea, a narrower bandwidth may be better. The same can be said of steeply sloping sensorineural hearing losses: a narrower bandwidth may be better than a wider one.
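The two warning signs above can be written as a small checklist. This is a sketch rather than a fitting rule: the function name and the audiogram layout are invented for the example, and the 20 dB per octave cut-off for “steeply sloping” is an assumption, since no number is given here. An empty result only means that neither warning sign was raised; it does not by itself recommend a broad bandwidth.

```python
import math


def narrower_bandwidth_flags(audiogram, loss_limit_db_hl=60, steep_db_per_octave=20):
    """Return the reasons, if any, why a narrower music bandwidth may be better.

    `audiogram` maps frequency in Hz to threshold in dB HL.
    `steep_db_per_octave` is an assumed cut-off, not a value from the article.
    """
    flags = []
    freqs = sorted(audiogram)

    # Losses greater than about 60 dB HL raise the possibility of dead regions.
    if any(audiogram[f] > loss_limit_db_hl for f in freqs):
        flags.append(f"loss greater than about {loss_limit_db_hl} dB HL")

    # Steep slope: thresholds worsening quickly between adjacent test frequencies.
    for low, high in zip(freqs, freqs[1:]):
        octaves = math.log2(high / low)
        slope = (audiogram[high] - audiogram[low]) / octaves
        if slope >= steep_db_per_octave:
            flags.append("steeply sloping configuration")
            break

    return flags


if __name__ == "__main__":
    mild_flat = {250: 30, 500: 30, 1000: 35, 2000: 35, 4000: 40}
    steep = {250: 20, 500: 25, 1000: 30, 2000: 35, 4000: 75}
    print(narrower_bandwidth_flags(mild_flat))
    print(narrower_bandwidth_flags(steep))
```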

Table 1. A survey of some hearing aid manufacturers with some suggested changes. Most state that there should be less compression and less noise management than for a speech program. As discussed in the text, these suggestions are probably quite valid, but only for mild hearing losses with a gently sloping audiometric configuration. Some manufacturers use a proprietary circuit, and these are marked with a ™ symbol where appropriate. Note: this is not a complete listing of the manufacturers, nor is it a full listing of all of the parameters suggested by the manufacturers for music.

Table 1 does make sense, but only for mild hearing losses with a relatively flat audiometric configuration.

3. More advanced features are better:

Noise reduction, feedback management, and impulse control to limit overly intense environmental signals may be useful for speech, but with the lower gains required for music (in order to obtain a desired output) these features may degrade music. Feedback management systems may blur the music (adaptive notch filters), cause rogue “chirps” in music (phase cancellation systems), and may even turn off the musical instrument (e.g. the harmonics of the flute may erroneously be viewed as feedback signals). Many manufacturers (e.g. Siemens and Oticon) have restricted the function of their feedback management systems to 1500 Hz and above to minimize these problems, but this is a case where “less is more”. With the lower gains required for music (about 6 dB lower than the respective speech-in-quiet program), feedback management systems may not even be required.

The same can be said about noise reduction: if fewer advanced features are implemented, the internal noise floor will be lower. And let’s turn off the impulse control systems (as correctly pointed out by Unitron with a disabling of their Anti-Shock function). Impulse sounds occur frequently in music but rarely in speech; if they do occur in speech, they are of a low level, such as for the affricates (‘ch’ and ‘j’).
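Pulling these suggestions together, a music program can be thought of as the speech-in-quiet program with about 6 dB less gain and the advanced features switched off. The sketch below is purely illustrative: `Program`, its field names, and `music_program_from` are hypothetical and do not correspond to any manufacturer's fitting software.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Program:
    """Hypothetical, simplified description of one hearing aid program."""
    name: str
    gain_offset_db: float
    noise_reduction: bool
    feedback_management: bool
    impulse_control: bool


def music_program_from(speech_in_quiet, gain_reduction_db=6.0):
    """Derive a music program: lower gain, advanced features disabled."""
    return replace(
        speech_in_quiet,
        name="music",
        gain_offset_db=speech_in_quiet.gain_offset_db - gain_reduction_db,
        noise_reduction=False,       # fewer features, lower internal noise floor
        feedback_management=False,   # may not be required at the lower gain
        impulse_control=False,       # impulse sounds are frequent in music
    )


if __name__ == "__main__":
    speech = Program("speech in quiet", 0.0, True, True, True)
    print(music_program_from(speech))
```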

More can be better, but when it comes to music, less is usually more.