A previous post floated the idea that ear-level amplification processing, including cochlear implants, represents the first and most advanced wearable Augmented Reality (AR) available to a widespread consumer market to date. It also noted that AR is considered an “emerging” field in which many psychoacoustic concepts and hearing aid components are being rediscovered and rethought by smart people in other fields.

For example, those who design and fit hearing aids on two-eared people are likely to be underwhelmed by AR’s (re)discovery of so-called “3D audio,” as reported by The Verge:

Slowly but surely, binaural is becoming a linch pin in virtual reality development.

Old news for us, but good news for technological development, to the advantage of all. A few examples of in-depth aural AR thinking and development are described in today’s post.

Hearing Aids and Accessories Reinvented

At MIT

A 2002 paper from MIT Media Lab acknowledged the hearing aid’s basic role in augmented reality:

“Audio augmentations are typically created by playing audio cues to the user from time to time. … If one wants constant alteration of a person’s perceived audio, one must look to hearing aids.”

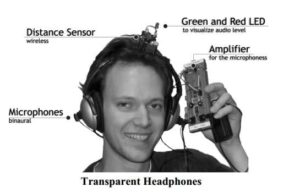

Fig 1. 2002 innovation in headphones with hearing aid-like features for “transparent” and “opaque” hearing.

The MIT researchers aimed to go further by creating an ear-level device that distinguished between “transparent” and “opaque” hearing. Though a new concept in their lab, the notion was already far along in the hearing aid world. In our lingo, the effects of ear-level amplification on perceived sound were roughly analogous to open fit (“transparent”) and closed fit (“opaque”) technologies.

Other user-controlled “realities” envisioned in the MIT paper (e.g., music, telephone, mute, adaptive directionality) were poised for takeoff in hearing aids in 2002 as manual and automatic programming and Bluetooth functionality came on board. The MIT lab was on target but farther behind corporate R&D than its researchers may have realized. Plus, the corporate product, hearing aids, looked a lot more user-friendly than the MIT prototype. Fig 1 is reproduced here from a previous post just because it’s so interesting to look at.

Just because Fig 1 looks dorky doesn’t mean the research is or was retro. The researchers’ quest for a means of distinguishing between “transparent vs opaque” listening remains highly relevant, and unsolved, as hearing aids merge with Hearables.

As wearers gain the ability to self-select listening environments, the hearing aid industry faces a RIC-induced conundrum. RICs are preferred by the majority of hearing aid consumers nowadays, accounting for 58% of all units sold in the US in 2014.¹ They all but eliminate own-voice effects and afford users natural (transparent) amplification of their surround. The conundrum is that occluding earphones like Apple EarPods are preferred by consumers who listen to music and other streamed content, independent of price. They deliver higher fidelity in an occluded ear and attenuate the wearer’s sound surround, producing an “opaque” listening environment.

What’s desirable is a Hearable that acts like a premium programmed RIC for listening to one’s environment and acts like an EarPod for listening to streamed input. That product remains to be developed and delivered to the market.

In Finland

Helsinki University acoustics and telecommunications researchers came up with the concept of Mobile Augmented Reality Audio (MARA) in a 2004 paper that described a number of psychoacoustic experiments with prototype equipment.² MARA was envisioned as a wearable, binaural device, worn full time, that would stream wireless “virtual reality” audio content (e.g., music, speech, phone) in real time. Streaming would be superimposed on the wearers’ “pseudoacoustic environments,” with MARA designed to ensure that wearers heard in a “natural way” through the devices.
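For readers who think in code, the idea of superimposing a stream on the wearer’s surroundings can be sketched in a few lines of Python. This is only an illustration, not the Helsinki group’s method: the function name `mix_frame`, the `transparency` parameter, and the simple hard limiter are all assumptions made for the example, and real devices must also deal with latency, leakage around the earpiece, and binaural cues.

```python
def mix_frame(ambient, streamed, transparency):
    """Blend one frame of microphone (ambient) samples with streamed samples.

    Hypothetical helper for illustration only.
    transparency = 1.0 passes the surroundings at full level ("transparent");
    transparency = 0.0 mutes them so only the stream is heard ("opaque").
    Samples are floats in the range [-1.0, 1.0].
    """
    if not 0.0 <= transparency <= 1.0:
        raise ValueError("transparency must be between 0.0 and 1.0")
    if len(ambient) != len(streamed):
        raise ValueError("frames must have the same length")

    mixed = []
    for a, s in zip(ambient, streamed):
        # Scale the surroundings, then superimpose the streamed content.
        sample = transparency * a + s
        # Hard-limit the sum so it stays within the valid sample range.
        mixed.append(max(-1.0, min(1.0, sample)))
    return mixed


ambient = [0.25, -0.5, 0.125]    # what the ear-level microphone picks up
streamed = [0.5, 0.25, -0.25]    # music, speech, or phone audio

opaque = mix_frame(ambient, streamed, 0.0)       # stream only
transparent = mix_frame(ambient, streamed, 1.0)  # stream plus full surroundings
```

A single gain between 0 and 1 is the crudest possible way to move between the “opaque” and “transparent” extremes; it is used here only because it makes the superposition easy to see.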

The basic goals of MARA were not dissimilar to the MIT group’s desire for opaque and transparent processing. The Helsinki design, as described, approximates hearing aid design:

“microphones…mounted directly on earplug-type earphone elements…placed very close to the ear canals… fit in the user’s ear…[with] no additional wires.”

Perhaps more than anything, the Helsinki results showed the limitations of their prototypes, highlighting the need to understand and control a host of acoustic and psychoacoustic parameters. Those variables are the usual suspects for all who do hearing aid research or clinical work: reverberation, localization, lateralization, binaural processing. The authors concluded that “Details of binaural signal processing are beyond the scope of this paper.”

Of interest were the paper’s final comments, which riffed on prospects for intelligent, interactive audio. Such visions of AR were prescient, anticipating the merging of hearing aid technologies with emerging Hearables aspirations:

“The proposed model could also be used to produce a modified representation of reality, which could be advantageous, more convenient, or just an entertaining feature for a user. For example, in some cases the system would provide hearing protection, hearing aid functions, noise reduction, spatial filtering, or it would emphasize important sound signals such as alarm and warning sounds.”

Evolution, Augmentation, Telepathy, Future-Speak

At first glance, it seems as though the wheel is getting reinvented when smart people in other fields in different parts of the world simultaneously retrace steps, identify old concepts, and tackle problems that are already solved, often elegantly, in hearing aid design. But reinvention introduces new thinking and innovation that improves wheels as well as hearing aids. Here’s how the wiki Future summarizes us and them now and going forward:

Sophisticated augmented reality technologies already exist for audio. Digital hearing aids can be configured to include or exclude different kinds of sounds, like refrigerator and fan noise, and different modes of hearing can be chosen. Cell phones can be used in conjunction with hearing aids (and inductive loopsets), allowing people to talk on a cell phone while blocking all background noise … [using] Bluetooth… [which eliminates the] need to wear headphones or use a headset. This can be combined with subvocal speech to essentially make practical telepathy possible.

That last bit about telepathy via subvocal speech recognition is NASA talking. Teleporting with William Shatner may actually be in our future.

Meanwhile, the consumer electronics application HyperSound was introduced last week by guest columnist Brian Taylor, who is its new representative. “Directed audio” uses parabolic speakers with ultrasound carriers to aim “highly controlled, narrow beam(s)” that are inaudible unless you are in the beam or in the path of “reflected sound from a virtual source.”

It doesn’t take a mind reader to imagine various good and bad scenarios if NASA, hearing aid manufacturers, and directed audio get together. That’s one of many visions for augmenting reality using ear-level devices as the conduit for highly personalized, exquisitely targeted, near- and far-field individual communication systems that work in real time. This is technological disruption writ large, history in the making. It will reshape thinking, design, and fitting of hearing aids in both positive and negative ways. Fears of World Dominance, or at least threats to privacy, are certain to influence the timing, design, and selling and fitting of secure hearing aid and directed audio systems.

Who would have thought that Audiologists and hearing aid manufacturers were in from the beginning and remain front and center? Next post will consider the topic from an economic point of view, looking at market power and Big Data beckoning.

This is the 8th post in the Hearable series.

References and Footnotes

1. Strom K. Hearing aid sales rise by 4.8% in 2014; RICs dominate market. The Hearing Review, March 2015, p 6.

2. Härmä A, et al. Augmented reality audio for mobile and wearable appliances. J Audio Eng Soc, 2004, 52(6), 618-639.