As ear level devices begin to deliver and curate Content choices, the Demand curve will expand and usher in a new world of security and privacy concerns for consumers, providers, suppliers, and government regulators. Big Data will be in our midst. (previous post)

Big Data. So many reasons to embrace it; so many concerns that keep it at arm’s length, at least until privacy and security fears and tensions are recognized and thoroughly addressed in our industry. Policy-makers have anticipated, debated, and prepared for Big Data since as early as 1972. The resulting guidelines and regulations for the health and communications industries are under continuous scrutiny and subject to change as technology evolves.

The framework exists and it’s up to us to slot ourselves into the program. Today’s post gives a brief structural overview of privacy and security guidelines as they apply to hearing healthcare stakeholders.

Privacy for Ear Level Devices and Data

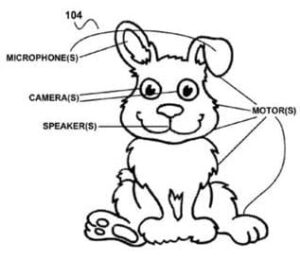

Fig 1. Google patent application for “creepy toys that spy on children” by recording conversations (see mic in the ear). Who’s authorized to listen? Who controls the toy? Who owns the toy?

Privacy concerns center on individual wearers of ear level devices. Brookings TechTank defines privacy1 as: “… the ability to understand and exercise control over how information may be used by others.”

Within that user-centered definition, Brookings identifies two types of privacy violations. Hearing healthcare policy makers are familiar with both through several regulatory frameworks that protect health information.2 These frameworks are themselves grounded in the Fair Information Practice Principles (FIPPs), which trace back to HEW’s Code of Fair Information Practices, and in FTC guidance on online privacy in the electronic marketplace.3 The two types of violations are:

1. Privacy violation by authorized parties. Authorized parties, such as audiologists and dispensers, may use patient information in ways the information owner does not want, including unwanted dissemination, sale, or loss of records.

HIPAA’s4 Privacy Rule protects patients against these and other violations. Its strictures are familiar to all providers who work in hospital settings, provide services under health plans, bill for services through healthcare clearinghouses, and/or process transactions electronically.

Federal agencies authorized to gather and handle personal information could also use individual health information in ways the information owner has not approved or does not want. Beyond HIPAA requirements, the Privacy Act (PA)5 limits the sharing and disclosure of individuals’ data both between agencies and outside them; covered agencies include the VA, FDA, CMS, and the Department of Defense.

2. Privacy violation by unauthorized parties. Under HIPAA, practitioners are tasked with safeguarding patient records and data from physical and electronic breach. Such breaches range from the accidental (leaving charts out) to the intentional (theft of records). They may be perpetrated by knowing or unknowing individuals (family members, the garbage man), and they may be sophisticated (electronic hacking) or naive (walking off with someone’s records).

At the Big Data level, federal agencies must protect medical information from hacking and other unauthorized intrusion, as required by the Federal Information Security Management Act (FISMA) of 2002. Pseudonymization, which replaces direct identifiers such as names with codes, becomes a necessary and important protection against privacy breaches of large and/or pooled databases.

Security for Ear Level Devices and Data

Brookings defines security as “the degree to which information is accessible to unauthorized parties.” Privacy and security are not the same: privacy can be breached without a security breach (item 1, above); security can be breached without invading privacy (item 2, if data is pseudonymized).
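To make the pseudonymization point concrete, here is a minimal Python sketch, assuming a keyed-hash (HMAC-SHA256) approach. This is one common technique, not a method prescribed by HIPAA, FISMA, or the Brookings piece, and the record fields (`name`, `date_of_birth`, `device_model`, `pta_db_hl`) and the custodian key are hypothetical. If only the pooled records leaked, an intruder would see fitting data tied to a code rather than to a name, while the key stays with the data custodian.

```python
import hashlib
import hmac
import secrets

# Hypothetical secret key held only by the data custodian (e.g. the clinic),
# stored separately from the pooled dataset.
CUSTODIAN_KEY = secrets.token_bytes(32)

# Hypothetical direct identifiers removed before records leave the clinic.
DIRECT_IDENTIFIERS = ("name", "date_of_birth")


def pseudonymize(identifier: str, key: bytes = CUSTODIAN_KEY) -> str:
    """Replace a direct identifier with a keyed hash (HMAC-SHA256, hex).

    The same identifier always maps to the same pseudonym under the same key,
    so visits can still be linked, but the pseudonym cannot be recomputed
    without the key.
    """
    return hmac.new(key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


def prepare_for_pool(record: dict, key: bytes = CUSTODIAN_KEY) -> dict:
    """Drop direct identifiers from a fitting record and add a pseudonymous ID."""
    pooled = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}
    pooled["patient_id"] = pseudonymize(
        record["name"] + "|" + record["date_of_birth"], key
    )
    return pooled


# Example with a made-up record.
record = {
    "name": "Jane Doe",
    "date_of_birth": "1950-04-01",
    "device_model": "example-RIC",
    "pta_db_hl": 45,
}
pooled = prepare_for_pool(record)
print(pooled)
```

Pseudonymization reduces risk rather than eliminating it: the remaining fields can sometimes be combined with outside data to re-identify a person, so pooled records still need the security controls described in this post.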

Whereas privacy has to do with end-user control, security concerns center on the other side of the supply line, and they represent a key obstacle to the development of Big Data within the hearing healthcare industry. In the present hearing healthcare environment, manufacturers shield proprietary information in hopes of securing a competitive edge; suppliers keep quantities sold and wholesale pricing confidential in hopes of maintaining higher profit margins; providers hold sales and fitting data close in hopes of securing patients to their practices.

Beyond those market-oriented security concerns, FISMA regulations bridge the security-privacy connection for information systems of federal agencies that use medical data. Clinicians and health settings must adhere to the security standards prescribed in the HIPAA Security Rule, which are designed to limit access to authorized persons and entities. Efforts to integrate hearing healthcare data into Electronic Health Record (EHR) systems and Health Information Exchanges (HIEs) are expensive, intimidating, and still very much in their infancy.

Next Up – Ownership

Technological innovation is driving two very different electronic environments, both of which will work with large datasets that will place further demands on privacy and security of future hearing care:

- shared data via Health Information Exchanges

- cloud-stored data for ordering and fitting of consumer hearing/listening devices

At present, the hearing healthcare industry is an outlier to these processes. Willingness to endorse and participate in these Big Data-driven environments will vary among stakeholder groups (manufacturers, suppliers, consumers), depending on their perceived ownership of data, devices and processes. That’s the topic of the next post in this series.

This is the 10th post in the Hearable series. Click here for post 1, post 2, post 3, post 4, post 5, post 6, post 7, post 8, post 9, post 11.

References and Footnotes

1Bleiberg J & Yaraghi N. 4/13/2015. Balancing privacy and security with health records. Brookings TechTank.

2See Chapter 10, Security and Privacy Concepts in Healthcare IT. In Sengstack P & Boicey C (Eds) 2015. Mastering Informatics. A Healthcare Handbook for Success. Indianapolis, IN: Sigma Theta Tau Int’l.

3HEW (the then US Department of Health, Education, and Welfare) established the Advisory Committee on Automated Personal Data Systems in 1972. The committee’s report, published in 1973, enumerated five principles in its Code of Fair Information Practices. The FTC reported “Privacy Online: Fair Information Practices in the Electronic Marketplace” to Congress in May 2000.

4Health Insurance Portability and Accountability Act, enacted by US Congress in 1996.

5The Privacy Act of 1974, 5 U.S.C. § 552a.

feature image courtesy of FTC; bunny courtesy of Google patent art