Before you start your Christmas shopping or light those Hanukkah candles, grab your favorite festive beverage, perhaps eggnog, and catch up on some assorted industry news.

Starkey Campaign and Lobbying Contributions Exceed Those of Medical Device Giants

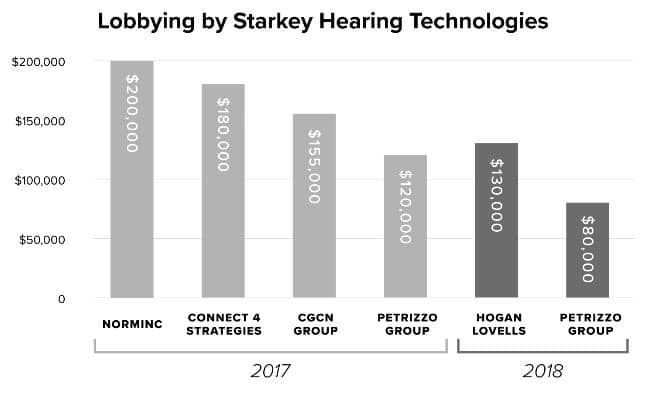

In what is described as a political battle against Bose, MedTech Dive reports on a $687,000 lobbying campaign by Starkey during the recently concluded November election. According to the report, Starkey's lobbying campaign exceeded the totals of medical device giants like Medtronic, Boston Scientific and Edwards Lifesciences.

According to the report, the uptick in lobbying spend comes as Starkey fights to shield itself from looming OTC hearing aid deregulation that could jeopardize its business. During the 2018 election cycle, 80% of the lobbying funds went to Republicans and the remaining 20% to Democrats.

The accompanying chart, which was published in the MedTech Dive article, shows a breakdown of lobbying funding in 2017 and 2018.

Starkey, of course, is not the only company interested in hearing aid regulations and using lobbying dollars to influence political decisions. Bose has spent $530,000 since 2016 to retain the Alpine Group to lobby on FDA issues, the passage of OTC legislation and implementation of the 2017 OTC Hearing Aid Act.

VA Hearing Aid Sales Also Mentioned in Report

According to the November 29 MedTech Dive article, a 2018 Starkey lobbying disclosure stated that the company has been lobbying the Department of Defense on an “advocacy initiative to advance adoption of hearing health standards throughout the U.S. Government for acquisition and deployment of hearing aids.”

VA spokesperson Terrence Hayes told MedTech Dive that in fiscal 2018, the department purchased 786,741 hearing aids through its national contract at a cost of $291,875,369.11, or about $371 per hearing aid on average. According to data from the VA, Starkey represented $52,057,705 of that total (roughly 17.8% of the spending) and 15.85% of the total number of VA-purchased hearing aids.
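For readers who want to check the math, here is a quick sketch in Python using only the figures quoted above. The variable names are ours, and the last step assumes the 15.85% figure refers to Starkey's share of units rather than dollars, which the report does not spell out.

```python
# Figures quoted from the MedTech Dive report (fiscal 2018, VA national contract).
total_units = 786_741          # hearing aids purchased
total_cost = 291_875_369.11    # total dollars spent
starkey_cost = 52_057_705      # dollars attributed to Starkey
starkey_unit_share = 0.1585    # ASSUMPTION: 15.85% is Starkey's share of units

# Average cost per hearing aid across all manufacturers.
avg_cost = total_cost / total_units

# Starkey's share of total dollars spent.
starkey_dollar_share = starkey_cost / total_cost

# Implied Starkey unit count and average price, valid only under the assumption above.
starkey_units = total_units * starkey_unit_share
starkey_avg_cost = starkey_cost / starkey_units

print(f"Average cost per aid: ${avg_cost:,.2f}")
print(f"Starkey share of dollars: {starkey_dollar_share:.2%}")
print(f"Implied Starkey average cost per aid: ${starkey_avg_cost:,.2f}")
```

If the unit-share assumption holds, Starkey's share of dollars is larger than its share of units, which would mean its devices were priced somewhat above the overall average.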

Vocal Emotion Recognition Scores Predict Quality of Life in Adult Cochlear Implant Users

According to 2016 NIH data, there are approximately 750,000 Americans with profound hearing loss, yet fewer than 8% of them have received a cochlear implant. Outcomes data, moreover, indicate that adults with profound hearing loss tend to receive higher levels of benefit from cochlear implants compared to similarly matched adults who use hearing aids. One of the key measures, aided word recognition scores, usually shows a clear advantage for cochlear implants.

A recent study, published in the Journal of the Acoustical Society of America, suggests that vocal emotion recognition scores were an important predictor of Quality of Life (QoL) scores for cochlear implant recipients.

Vocal emotion recognition testing uses ten semantically neutral English sentences, each spoken in five target emotions (angry, happy, neutral, sad, and anxious).

The results of the study highlighted the importance of vocal emotion recognition ability to post-lingually deafened adult CI users’ QoL. Vocal emotion recognition more accurately and broadly predicted the daily experience with CIs than did sentence recognition, and the Arizona State University researchers propose that the vocal emotion metric be considered a useful clinical measure of CI outcomes.

In addition to the Minimal Speech Test Battery that is used as part of the CI evaluation process, the use of vocal emotion recognition is a viable way to demonstrate the benefits of a still under-utilized intervention for post-lingually deafened adults.

Wrist-Worn Wearables Morphing into Hearables, Says Forbes Report

Hearing aid technology and consumer audio technology continue to converge. Focusing more on the consumer audio side of the equation, a November 26 Forbes report describes a number of features included in the latest hearables to hit the market. These features include heart rate and body temperature measurement, built-in voice-enabled virtual assistants (e.g., Alexa and Siri) and fall detection.

Some of these features found in hearables are making their way to hearing aids. For example, the Starkey Livio, launched in August, incorporates fall detection into its functionality.

New Research Shows the Brain Uses Its ‘Autocorrect’ Feature to Identify Sounds

Aoccdrnig to a rscheearch at Cmabrigde Uinervtisy, it deosn’t mttaer in waht oredr the ltteers in a wrod are, the olny iprmoetnt tihng is taht the frist and lsat ltteer be at the rghit pclae.

Even though the sentence above has jumbled letters, it probably wasn’t too difficult to comprehend it. Psycholinguists explain that the meme above is, in itself, false, as the exact mechanisms behind the brain’s visual “autocorrect” feature remain unclear.

Rather than the first and last letter being key to the brain’s ability to recognize misspelled words, explains new research, context might be of greater importance in visual word recognition.

The new research, published in the Journal of Neuroscience, looks into the similar mechanisms that the brain deploys to “autocorrect” and recognize spoken words. The New York University researchers looked at how the brain untangles ambiguous sounds. For instance, the phrase “a planned meal” sounds very similar to “a bland meal,” but the brain somehow manages to tell the difference between the two, depending on the context. The researchers wanted to see what happens in the brain after it hears that initial sound as either a “b” or a “p.” The new study is the first one to show how speech comprehension takes place after the brain detects the first sound.

The NYU researchers carried out a series of experiments in which 50 participants listened to separate syllables and entire words that sounded very similar. They used a technique called magnetoencephalography to map the participants’ brain activity. The study revealed that the primary auditory cortex detects the ambiguity of a sound just 50 milliseconds after onset. Then, as the rest of the word unfolds, the brain “re-evokes” sounds that it had previously stored while re-evaluating the new sound. In effect, the study shows that what comes later in a word can change how the brain interprets what came before.