Signia and its predecessor, Siemens, have an impressive legacy of innovation geared toward enhancing the real-world performance of individuals with hearing loss.

In this episode of This Week in Hearing, Bob and Brian recap many of the key milestones in the history of hearing aid technology over the past 75 years.

Full Episode Transcript

Bob Traynor 0:10

Welcome to This Week in Hearing, where listeners find the latest information on hearing and hearing care. Hello, I’m Bob Traynor. I’m your host for this episode of This Week in Hearing. Today, my guest is an old friend and colleague, who many of you know as a fellow host here at This Week in Hearing. Dr. Brian Taylor is currently the senior director of audiology at Signia Hearing, but has worn many hats over his 31-year career in our field. Thanks for being with me today, Brian. I can use all the help I can get in this host chair. And I know that we’ve both kind of sat here and interacted over the last few months.

Brian Taylor 1:03

Yeah, it’s great to be with you. And it’s nice not to have to push the record button and to let somebody else do it.

Bob Traynor 1:09

Well, for our colleagues, many of you know us, but many of you don’t. And as of this interview, Dr. Taylor has five books on everything from private practice. Although, you know, we have a little bit of a conflict on a private practice textbook, because we both have one out at the same time. But that’s okay. They kind of complement each other, from what we can tell.

Brian Taylor 1:36

I’d like to think so.

Bob Traynor 1:37

But in addition to private practice, he has textbooks out on hearing aids, marketing, counseling, and a number of other topics. He’s also run large audiology clinics, been the main audiologist for major buying groups, and, as many of us know, he is one of our hosts here at This Week in Hearing. And he tells me there are some distinct chapters in hearing aid development that are all fundamental to our everyday practice of the profession. He feels there’s some stuff that goes all the way back to 1946. Oh, that’s a long ways back. And the Harvard Report and some of Carhart’s research, as well as the big transition into the digital age, where he wants to give us some updates, and some things that were specific from Villchur and Killion and wide dynamic range compression. As well as, believe it or not, to our younger colleagues, there were days when there wasn’t multi-memory, there wasn’t programmability, and directional microphones were kind of a rudimentary technology. And Brian’s going to update us there. Also the huge transition to receiver-in-the-canal products. And then, guess what, one of the biggest ones was wireless connectivity, which happened here a few years ago.

Now, before we begin, Brian and I talked about this a little bit, and we would like to recognize the fact that Dr. Raymond Carhart, the father of our profession, was probably one of the most progressive audiologists ever to practice. And to give Carhart credit for realizing that clinical audiology would be a mainstay of the audiology profession, here’s something he wrote in 1975. Now, keep in mind, this was written way before we paid attention to sexist language and so on. Try to read through that, but listen to how he predicts where audiology was going in the mid 1970s. So here’s a quote from Dr. Raymond Carhart.

“The researcher can gather fact after fact, at his leisure, until he has a sufficient edifice of evidence to answer his question with surety. How different is the clinician’s task. He too is an investigator. But the question before him is, what can I do now about the needs of the person who’s seeking my help at this moment? The clinician proceeds to gather as much data as possible about his client in a clinically reasonable period of time. He does not have the luxury to wait several months or years for other facts to appear. The decisions of the clinician are more daring than the decisions of the researcher, because human needs that require attention today impel clinical decisions to be made more rapidly, and on a basis of less evidence, than do research decisions. The dedicated and conscientious clinician should bear this fact in mind proudly.” And as Carhart sums it up: “His is the greater courage.”

So with that as an orientation from the father of our profession, Brian, it’s now time for your encore, which would be discussing Dr. Carhart and some of his research to begin.

Brian Taylor 6:06

Sure. Well, I think a good way to start with Raymond Carhart is to say that he’s a lot more famous than just for his notch. I think most audiologists know Carhart’s notch, something that you see with otosclerosis. But he was a real pioneer with respect to hearing aids. So you have a quote from 1975. I know that he did some pioneering work back in 1946 around the term ‘selective amplification’, something we kind of take for granted today. We talk about the prescriptive fitting methods, the NAL, the DSL, and every manufacturer has a First Fit formula. They all are really based on this concept of selective amplification, which essentially is that the gain mirrors, inversely mirrors, I guess, the audiogram. So the greater the hearing loss at a particular threshold, the more gain. DSL, NAL and many, many others that go back to the 1940s, and probably before that, call for different amounts of gain. But it’s all predicated on this idea of mirroring the audiogram and selective amplification.

And actually, there’s a really, I think, excellent summary of this that was written by another father, the father of the American Academy of Audiology, Dr. James Jerger. He wrote a really interesting piece in the Hearing Review about, I want to say, three years ago, I think it was in 2018, that talked about this important year, 1946, where Carhart kind of introduced this concept of selective amplification. And he introduced it kind of in the face of what some people know as the Harvard Report, which actually, I think, was created by a bunch of researchers at the Psycho-Acoustic Laboratory at Harvard University, in conjunction with some researchers at Central Institute for the Deaf in St. Louis. It basically said, you know, provide all people with a hearing loss, mild, moderate, maybe moderately severe, with the same gain and frequency response: a flat frequency response, kind of a one-size-fits-all approach, works well for everybody. And that was the consensus in 1946. And Carhart, I think, kind of stood up and said, ‘Well, my research says something a little different. You need to mirror the audiogram, provide more gain in the highs, assuming there’s more hearing loss in the highs.’ And like I said, all these prescriptive formulas kind of arose from that concept. So that’s the importance of Raymond Carhart and selective amplification.

You know, I think if you then kind of go from there, maybe move the clock ahead another 20 years or so, to the 1970s, I think you can split the hearing aid world into probably four or five distinct eras. A lot of our listeners or viewers probably aren’t familiar with a really important audio engineer by the name of Edgar Villchur. He did some pioneering work in the area of ported speakers. If you like to listen to music on a small speaker with good sound quality, that kind of speaker was actually invented by, or at least one of the inventors was, Edgar Villchur. He should probably be more well known in audiology than he is. He is one of the inventors of wide dynamic range compression (WDRC): applying more gain for soft sounds to kind of mimic the function of the normal cochlea, and less gain as input levels increase. He did that work in the early 70s, and that was included in hearing aids starting with ReSound about 10 years later. And now I think fundamentally all digital hearing aids have some form of WDRC. Another important person, who I think most of our viewers probably know, that fits into this era of WDRC in the 70s and 80s is Mead Killion, the inventor of the K-amp. Similar concept there: less gain is applied as input levels increase. So that’s kind of the first era, I think, of the modern hearing aid, around WDRC.

You could also probably throw in there directional microphone technology. I think directional microphone technology has been around for an awful long time, since the 1960s at least. But it didn’t become really commercially viable until probably the late 80s and early 90s. They had some issues with the noise floor and stuff like that being too loud. But some of our viewers might remember the Audio Zoom from Phonak, which was one of the first, in my opinion, really good directional microphone systems in, I guess, the early 90s. There were some others as well. But that’s kind of the first era of modern hearing aids.

And then that takes us into maybe the mid 90s, when digital signal processing kind of became the rage: the era of digital hearing aids, mid 90s through the early 2000s. And of course with that, you get all kinds of programmability. You throw away your screwdriver and make the adjustments with your software. So there’s a level of precision. And, you know, I think most of us would agree digital hearing aids back then didn’t have very good sound quality. But man, have they improved over the last couple of decades. We can fit a much greater range of hearing losses, sound quality’s better, noise floors are better, flexibility, all kinds of things you can do.

Then I think we move into the era of receiver-in-the-canal technology, with individuals like Natan Bauman, who kind of put that on the map. You know, that opened up a lot of possibilities around smaller devices, more comfortable, more instant-fit kinds of devices. So there’s all kinds of, I think, advantages there around RICs.

That moves us into the era of wireless connectivity, where you see the hearing aid starting to become paired with smartphone technology: Bluetooth connectivity, the ability to improve the signal-to-noise ratio, streaming directly from a phone, from a computer, from a TV. That has all kinds of advantages.

And now I think we’re kind of leaving that era. Not that wireless connectivity isn’t still important, obviously, and there are still probably some areas there that need to be enhanced. But we’re moving into a new era, which I kind of call the era of artificial intelligence and machine learning. Even though AI and machine learning have been around for a long time in hearing aids, I mean, in the Signia world, we’ve had trainable hearing aids for about 15 years, and that’s a type of machine learning. But I think it’s really starting to become more sophisticated, more user friendly. And what AI and machine learning, I think, really allow is self-programming, self-adjustment, self-fitting devices. It puts more control, meaningful control, in the hands of the wearer, where they can access their hearing aid through a smartphone app and make all kinds of really, you know, individualized adjustments if they want to do that.

So that’s kind of how I think of the modern hearing aid world, those four or five distinct eras since the 1970s. You know, it’s amazing. And you can look at the MarkeTrak data on this, I think, too. Hearing aid satisfaction 30 years ago was around 60, maybe 65%. And in the last MarkeTrak, published a year or two ago, MarkeTrak 10, overall satisfaction was in the mid to upper 80s. So that kind of shows you a combination of better technology, incrementally improving technology, and also the skill of the hearing care professional, how they kind of go hand in hand to drive that number. So, I don’t know, Bob, did you have any feedback on my long-winded history of hearing aids?

Bob Traynor 14:53

No, actually, all that stuff is a great reminder. And I know you and I remember when we used screwdrivers, but there’s a whole lot of colleagues out there these days who don’t ever remember using a hearing aid screwdriver. And I mean, that’s how I started wearing glasses. One day, I was trying to turn the screw on an output control, and I was having a hard time getting the screwdriver in there. My office manager says, “Why don’t you get yourself some damn glasses?” Oh, yeah, that’s probably a good idea. So the idea is that there’s been a whole lot of changes as all of us have progressed in our careers. And now you have some speculations as to the kinds of things that are going to happen in the next few years for our new colleagues that are out basically becoming Carhart’s clinicians, as he presented this long ago.

Brian Taylor 16:02

Well, I think the field of auditory science to pay attention to right now is auditory scene analysis. It’s a field that’s been around for a while. But I think you’re going to start to see hearing aids that more closely mimic what auditory scene analysis experts have been telling us about how we hear and how we understand in complex listening situations. You know, I think most of our viewers in audiology are probably familiar with how the auditory system works in a top-down and a bottom-up manner. Auditory scene analysis is really the theory that underpins that. It talks about how people search and process information. And I think you’re going to start to see hearing aids that kind of mimic that automated auditory scene analysis process that we all innately have, which is pretty exciting.

Bob Traynor 17:13

So you see some of this stuff transitioning into the over-the-counter products, as well as into self-fitting DTC, direct-to-consumer, and those kinds of things as well?

Brian Taylor 17:27

Yeah, well, I think that one of the really cool things about machine learning is that you can take data from thousands of other fittings that are held up in the cloud. It’s anonymized data: you don’t know the name of the person, but you know maybe their audiogram and the fitting parameters they’re using. And a person with an app can interact with those thousands of data points and see what those people have done in certain situations. Let’s say they’re in a noisy environment, a cocktail party, and they want to maybe tweak the hearing aid. Well, rather than going to their audiologist to have the audiologist, you know, based on all their experience, make adjustments to the hearing aid, they can take those thousands of data points from similar wearers. It’s all combined into one spot, it takes the average, and it gives the patient a couple of options as far as how they might want to adjust their own hearing aid, based on how those other thousands of people around the world have adjusted their hearing aid in a similar situation. So that’s an exciting possibility. And of course, not all hearing aid wearers probably want that. But for those that want to take the time to individualize the fitting on their own, I think that’s a pretty powerful tool that they would have just on their phone.

Bob Traynor 18:54

So now, does Signia have some kinds of technologies that fit into some of the parameters that we’ve been discussing here?

Brian Taylor 19:08

Signia has a feature called Signia Assistant that does pretty much what I just described. It allows the patient to make adjustments based on what thousands of other similar wearers have done when they’ve been in that same situation. It’s something that the provider has to enable, so the patient doesn’t just do this on their own. Their provider has to be involved in the process of kind of turning the controls over to the patient. And inside that app, it’s locked down to a certain extent, so they can only adjust by a certain amount. It doesn’t give them complete control, as that would be too unwieldy for anybody, but it gives them some options to choose from, sort of off a menu. When they go into certain places that are notorious for needing adjustments, they can look at what other people have done and then make an adjustment on their device based on that information.

Bob Traynor 20:11

Isn’t it interesting that, you know, back when I started in audiology, it was a little bit kind of like, “Hi, how does that sound?” And guess what? Here we are 40-some years later, and we’re now back to “Can you tell me how that sounds?” A little bit of coming full circle, to some degree.

Brian Taylor 20:35

You’re right about that. I think that’s really an interesting comment. Because, you know, there’s nothing wrong with going to see the provider, the audiologist or hearing care professional, to make those adjustments. They have tons of experience; they’ve seen lots of patients. But that’s one person’s opinion, basically, albeit an expert opinion. When you can take thousands of data points from, you know, literally dozens or hundreds of wearers that are very similar to you, the individual, I think that takes it to another level of precision.

Bob Traynor 21:08

It does. And well, I guess we’re kind of at a point where we’ve pretty well discussed hearing aids all the way back from 1946 until now, in a relatively short period of time. So for those of you who were looking for a summary of hearing aids from this period, guess what, it’s right here. And so I really want to thank Brian for being with me today at This Week in Hearing. It’s always good to have a colleague that we can kind of go back and forth with a little bit, where you’re not worried about, oh gee, did I say the right thing, or did I not say the right thing? And maybe I didn’t, but that’s okay, because we can always laugh about it later. But thanks so much for being with me today, Brian, on This Week in Hearing.

Brian Taylor 22:04

Yeah, my pleasure, Bob. Thanks for having me.

In addition to their conversation, there were some important points Bob and Brian want TWIH viewers to consider. They are summarized below.

Hearing Aid Use Rates and Benefits

There is a relationship between wear rates and overall benefit. That is, the more hours per day a person wears their hearing aids, the more likely they are to experience optimal benefit in real-world listening situations. Hearing aid design is an important variable that contributes to daily wear rates. By providing a variety of fashionable form factors, including Active, Styletto and Insio, Signia helps reduce the negative stigma associated with hearing aids, which is believed to bolster daily wear rates.

An under-appreciated, yet critical component related to improved hearing in noise is the ability of the hearing aid to optimize audibility. This requires a robust feedback cancellation system that expands the fitting range and provides audibility for a range of inputs without feedback. Independent research indicates Signia has one of the best feedback cancellers on the market.

In areas of relatively high noise that remains stationary relative to the wearer, Signia’s bilateral beamforming system has been shown to outperform other similar systems – even in adults with normal hearing. Additionally, in areas of complex noise in which speech-like noise surrounds the wearer, Signia’s AX Augmented Focus split-processing yields a significant benefit relative to competitors. It’s worth going into some details on how AX’s Augmented Focus technology is unlike any other hearing aid on the market today.

Let’s start with a universal feature found on all modern hearing aids: signal classification systems. A key component of AX’s split processing is the analysis of the listening soundscape by the hearing aid’s signal classifier. Traditional classifiers use modulation and intensity level-based approaches to determine the type of listening environment of the wearer. Basically, hearing aids use a few select acoustic parameters to sort the soundscape into a limited number of classification buckets. Once a soundscape is in one of these buckets, appropriate algorithms such as compression, noise reduction and gain can be applied to sound inputs. The obvious limitation of this “bucket” or destination approach is that some soundscapes are extremely complex and cannot be accurately classified by most devices’ on-board classification systems. AX is different.

AX’s split processing takes the intensity level and modulation into account in identifying the acoustic environment. In addition, however, other acoustic factors assist in evaluating the wearer’s soundscape, including the signal-to-noise ratio, whether the wearer is talking, the overall ambient modulation of the environment, and whether the hearing aid wearer is stationary or in motion. These additional factors allow for a more dynamic approach to managing the soundscape, beyond the limited number of classification categories found in most other hearing aids.
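To make the contrast between the “bucket” approach and a more dynamic one concrete, here is a minimal Python sketch. It is purely illustrative and is not Signia’s classifier: the `Soundscape` fields, the bucket names, every threshold, and the rules in `dynamic_settings` are assumptions invented for this example.

```python
from dataclasses import dataclass


@dataclass
class Soundscape:
    level_db_spl: float      # overall input level
    modulation_depth: float  # 0.0 = steady noise, 1.0 = strongly speech-like
    snr_db: float            # estimated signal-to-noise ratio
    own_voice: bool          # True while the wearer is talking
    in_motion: bool          # True while the wearer is moving


def bucket_classify(s: Soundscape) -> str:
    """Level- and modulation-based classifier with a fixed set of buckets."""
    if s.level_db_spl < 50.0:
        return "quiet"
    if s.modulation_depth >= 0.5:
        return "speech_in_noise" if s.level_db_spl >= 65.0 else "speech"
    return "noise"


def dynamic_settings(s: Soundscape) -> dict:
    """Blend several cues into continuous settings (all rules are made up)."""
    # More noise reduction as the SNR drops, clamped to the range 0..1.
    noise_reduction = min(1.0, max(0.0, (15.0 - s.snr_db) / 20.0))
    # Back off while the wearer is talking.
    if s.own_voice:
        noise_reduction *= 0.5
    # Use a wider microphone pattern while the wearer is moving.
    directionality = 0.3 if s.in_motion else min(1.0, noise_reduction + 0.2)
    return {"noise_reduction": noise_reduction, "directionality": directionality}


# Same level and modulation, but different SNR and motion.
seated = Soundscape(70.0, 0.6, 10.0, own_voice=False, in_motion=False)
walking = Soundscape(70.0, 0.6, -5.0, own_voice=False, in_motion=True)

print(bucket_classify(seated), bucket_classify(walking))
print(dynamic_settings(seated))
print(dynamic_settings(walking))
```

In this toy example both soundscapes land in the same bucket, because level and modulation are identical, yet the extra cues produce different settings for each. That is the general idea behind moving beyond a fixed set of categories.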

Analyzing the soundscape is merely the first step in helping the wearer hear well in any listening environment. The next step is how the hearing aid processes and manages the listening situation. Like other modern hearing aids, AX’s split processor logically applies select algorithms for the listening environment as it identifies the situation. However, AX also takes two distinct approaches in how the processing is executed – this is where the split processing name comes into play.

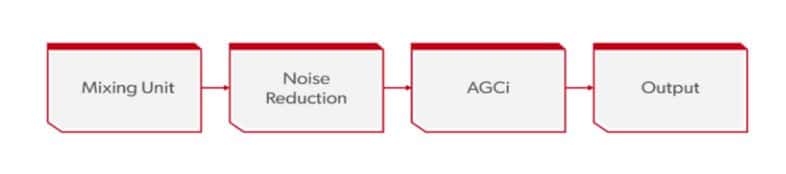

The first key difference between AX’s split processing and other devices on the market is the order in which algorithms are applied. With traditional approaches to amplification, the sound is processed in a serial, or step-by-step, manner. For example, as shown in Figure 1, the digital noise reduction algorithm may be applied to the input signal first, followed by compression. This serial processing could result in some algorithms undoing or exacerbating the effects of other algorithms, and could consequently introduce artifacts.

Figure 1. The serial processing pathway of sound, found in all hearing devices except Signia’s AX.

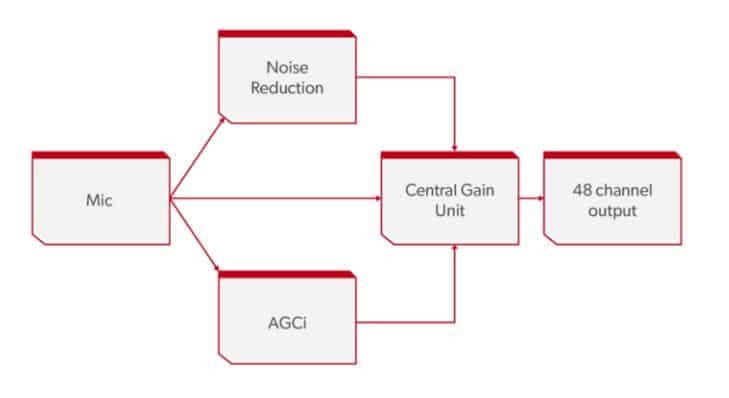

In contrast, AX’s split processing applies parallel processing, illustrated in Figure 2. The signal is fed to each algorithm in parallel, so each algorithm receives a signal untouched by the artifacts that could result when processing is applied serially.

Following the application of the parallel processing, a central gain unit brings the entire signal together without the artifacts that can result in the perception of poor sound quality. The result of parallel processing is a cleaner signal for the hearing aid wearer.

Figure 2. The parallel pathway of incoming sound in the AX platform. Note how the incoming signal is split compared to serial processing in Figure 1.
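The difference between the serial and parallel pathways can be sketched with a toy model that works on a single input level in dB. This is only an illustration of the general idea, not the AX implementation: the two gain rules, their thresholds, and the function names are all hypothetical.

```python
def noise_reduction_gain_db(level_db: float) -> float:
    """Hypothetical rule: treat low-level input as noise and cut it by 6 dB."""
    return -6.0 if level_db < 55.0 else 0.0


def compression_gain_db(level_db: float) -> float:
    """Hypothetical WDRC rule: 20 dB gain up to a 50 dB knee, 2:1 above it."""
    knee_db, gain_db, ratio = 50.0, 20.0, 2.0
    if level_db <= knee_db:
        return gain_db
    return gain_db - (level_db - knee_db) * (1.0 - 1.0 / ratio)


def serial_output_db(level_db: float) -> float:
    """Serial path: compression sees the signal after noise reduction."""
    after_nr = level_db + noise_reduction_gain_db(level_db)
    return after_nr + compression_gain_db(after_nr)


def parallel_output_db(level_db: float) -> float:
    """Parallel path: both rules see the original signal; gains are summed once."""
    total_gain = noise_reduction_gain_db(level_db) + compression_gain_db(level_db)
    return level_db + total_gain


for level in (54.0, 70.0):
    print(level, serial_output_db(level), parallel_output_db(level))
```

With these made-up rules, a 54 dB input comes out at 68 dB on the serial path but 66 dB on the parallel path. In the serial case the compressor sees the already attenuated signal, applies more gain, and partly undoes the noise reduction. At 70 dB, where the noise reduction rule is inactive, the two paths agree at 80 dB.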

Along with the parallel processing, AX’s split processing utilizes another innovative approach designed to address a common issue associated with hearing aids and one we’ve already mentioned: maximizing speech intelligibility in background noise.

AX’s split processing implements directional microphones as part of a sophisticated amplification strategy. In a traditional approach, all input signals processed by the hearing aids would follow similar noise reduction and compression characteristics. That is, no matter if the input is speech, music, or stationary noise like a fan, all the sounds are processed in essentially the same way: the input signal dominating the wearer’s soundscape would drive classification and compression characteristics. With directional microphones, this also meant that sounds from the rear direction would receive some additional attenuation as the sound was processed. However, the same overall noise reduction and compression characteristics would be applied regardless of the direction of a sound source.

With AX’s split processing, the directional microphones are used to identify front and rear input signals as separate streams and consequently apply separate processing to each stream. This means that target sounds from the front, which are typically speech, are likely to receive compression and noise reduction better suited to maximize the clearness of the speech signal. The competing sounds from the rear field receive different noise reduction and compression characteristics. Applying different processing to each of the two streams helps the wearer more easily distinguish the target speech signal from the competing background signals.
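As a rough sketch of the two-stream idea, the Python below compares one shared set of compression settings against separate settings for the front and rear streams. The parameter values, the fixed 6 dB rear attenuation, and the function names are assumptions chosen for illustration; they are not Signia’s actual settings or algorithm.

```python
def wdrc_gain_db(level_db, gain_db, knee_db, ratio):
    """Gain for one input level: constant below the knee, compressed above it."""
    if level_db <= knee_db:
        return gain_db
    return gain_db - (level_db - knee_db) * (1.0 - 1.0 / ratio)


# Hypothetical settings: gentler compression for the front (speech) stream,
# less gain and stronger compression for the rear (competing) stream.
FRONT = {"gain_db": 20.0, "knee_db": 50.0, "ratio": 1.5}
REAR = {"gain_db": 10.0, "knee_db": 45.0, "ratio": 3.0}
REAR_ATTENUATION_DB = 6.0  # assumed effect of the directional microphones


def single_stream(front_db, rear_db):
    """Traditional approach: the dominant input drives one shared gain."""
    gain = wdrc_gain_db(max(front_db, rear_db), **FRONT)
    return front_db + gain, rear_db + gain - REAR_ATTENUATION_DB


def split_stream(front_db, rear_db):
    """Split approach: each stream gets its own compression settings."""
    front_out = front_db + wdrc_gain_db(front_db, **FRONT)
    rear_out = rear_db + wdrc_gain_db(rear_db, **REAR) - REAR_ATTENUATION_DB
    return front_out, rear_out


front_1, rear_1 = single_stream(65.0, 65.0)
front_2, rear_2 = split_stream(65.0, 65.0)
print("single stream front-rear difference:", front_1 - rear_1)
print("split stream front-rear difference:", front_2 - rear_2)
```

With equal 65 dB inputs from front and rear, the shared-settings path separates the two streams only by the 6 dB directional attenuation, while the split path leaves the front stream roughly 24 dB above the rear stream. The specific numbers mean nothing beyond this toy model; the point is that per-stream settings can widen the gap between target and competing sounds.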

For more details on Signia’s Augmented Focus and clinical studies supporting its effectiveness, please contact Brian Taylor at [email protected]

About the Panel

Robert M. Traynor, Ed.D., is a hearing industry consultant, trainer, professor, conference speaker, practice manager and author. He has decades of experience teaching courses and training clinicians within the field of audiology with specific emphasis in hearing and tinnitus rehabilitation. He serves as Adjunct Faculty in Audiology at the University of Florida, University of Northern Colorado, University of Colorado and The University of Arkansas for Medical Sciences.

Brian Taylor, AuD, is the senior director of audiology for Signia. He is also the editor of Audiology Practices, a quarterly journal of the Academy of Doctors of Audiology, editor-at-large for Hearing Health and Technology Matters and adjunct instructor at the University of Wisconsin.
