Hearing Through the Eyes – This Does Sound Weird

You’ve Got to be Kidding, Right?

Can a person actually hear through their eyes? Color me among those shaking their head, seriously questioning if I had read, and heard, this correctly. But, such was suggested by the title of a presentation I attended at the American Auditory Society meeting in Scottsdale, AZ in March of this year. The title was “The Retina: A New Pathway for Hearing.” I was convinced that I had to listen to this presentation, because if this was true, then could it be applied in other ways, such as my wife knowing what I was thinking by just looking at me? I could just envision ahead, trouble in River City.

Aside from the attempts at humor in the opening paragraph, factual material was provided by the co-authors of the presentation, Paul Paulson and Chris Ruckman. This post is based on the AAS presentation, revised into this more readable form (with their permission).

This post merely presents the background for this rather unusual topic, unusual at least for many in attendance at the meeting, and especially for those who accepted the title at face value without recognizing the underlying science. From the puzzled stares of the audience, and the lack of questions, I assumed that some were trying to decipher the material, like some mysterious puzzle that they could not piece together. In all fairness, only twenty minutes were allowed for the presentation, so a more complete background was necessarily left out. And, while many are familiar with the various roles vision plays in communication, this one took some effort to get one's arms around. That the presentation came from a rather non-traditional, “outside” source (Temeku Technologies, Inc., Herndon, VA) seemed to both intrigue and caution attendees. (Temeku is a business that specializes in the development and integration of low observable (LO) technologies, material sciences, naval architecture, lightweight and composite structures, electromagnetic systems, and systems engineering.)

LO Technology Does Not Mean Hearing Trumpets

LO technology is also termed stealth technology, and is a sub-discipline of military tactics and passive electronic countermeasures, which cover a range of techniques used with personnel, aircraft, ships, submarines, missiles, and satellites to make them less visible to radar, infrared, sonar, and other detection methods. It corresponds to camouflage for these parts of the electromagnetic spectrum. This sounds impressive, and even intriguing, but how does this all relate to hearing with the retina?

Underlying Premise

The basic premise of the presentation was that hearing is not accomplished in our ears, but in our brain. The end organ (cochlea) is primarily a low data rate transducer converting sound waves into electrical nerve stimulation to reach the brain. It is the brain that processes the stimulation to create hearing.

While hearing is always accomplished only in the brain, there are ways other than the ear's own transducer to detect sound and send signals along nerves to the brain. For example, cochlear implants convert sound into electrical signals that stimulate the auditory nerve (if it is functional) to produce signals that reach the brain. Also, some synesthetes have been said to hear sounds in response to olfactory nerve stimulation (smell).

For those not familiar, synesthesia is loosely defined as “senses coming together,” which is just a translation of the Greek (etymology: syn, meaning together, and esthesia, from aesthesis, meaning sensation). At its simplest level, synesthesia means that when a certain sense or part of a sense is activated, another unrelated sense or part of a sense is activated concurrently. For example, when a person hears a sound, he or she immediately sees a color or shape in the “mind’s eye.” People who have synesthesia are called synesthetes.

Star Wars Approach

The retina technology being envisioned converts sound into stimulations that reach the brain by a unique way of interacting with high data rate visual nerves, yet without physically touching them.

The technology is intended to send sound along visual pathways, doing this with no drugs, surgeries, eyesight interference, or special training. A general conceptual visualization of the technology is shown in Figure 1.

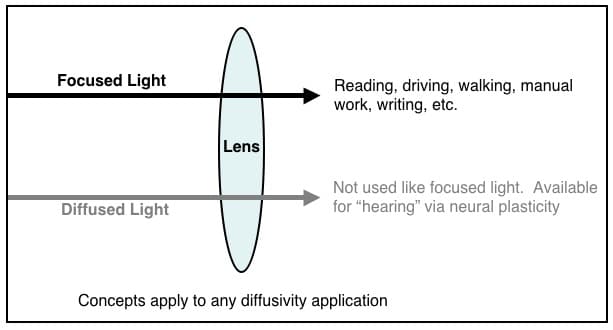

Figure 1. Using a lens as an example, focused light is the primary image passed through the lens. Diffused light also passes through the lens, but could be used for “hearing,” via neural plasticity, or for any of the other senses.

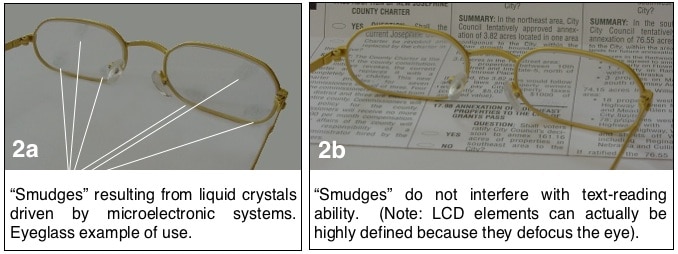

An example of this concept is provided in Figure 2. An eyeglass lens that has “smudges” on it is seen in 2a. The “smudges” might be analogous to fingerprints on a lens, but they do not interfere with seeing or reading (2b). These “smudges” are actually liquid crystals on the lens; they are activated by a microelectronic system and transmit second-sense information. In the proposed development, sound would be transmitted in some kind of visual pattern onto the eyeglass lenses, stimulating the visual sense subliminally. The person looking through the glasses would not see a distorted view; it would appear normal.

Modern displays strive to present a viewer with the sharpest possible text and graphics. However, sharp imagery is not the only option for addressing the visual processing capability of human brains. Human visual processing is also able to notice and interpret peripheral stimuli outside central vision, as well as diffuse stimuli overlaid onto the field of central vision. Information imparted along these routes can be interpreted separately from the brain’s focused-image processing, thereby offering a path to increase human cognitive capacity. This potential has gone unutilized because the brain has not been trained to use these stimuli with displays, though these forms of stimulation are part of everyday life. But the fact remains: brains can be trained with repetition to beneficially utilize these stimuli.

Figure 2. An example of eyeglasses, used for visual stimulation, but with liquid crystals at different locations on the lenses that respond to, and transmit information from another sense.

The Science Behind the Concept is Based on Physiology

How We Learn to Hear

Sight and sound neurons experience life together. Images and sounds are often experienced simultaneously, and chemical changes occur that connect the associated neurons, effectively wiring them together. When that happens, they fire simultaneously, as summarized by neuroscientist Carla Shatz{{1}}[[1]] Shatz, C. Quote from: Doidge, N, (2007) The Brain That Changes Itself, Chapter 3, p. 63[[1]]: “Neurons that fire together wire together.” Or, conversely, “Neurons out of sync fail to link.” These comments relate to the ideas of plasticity in modern neuroscience. Merzenich extended this to brain mapping{{2}}[[2]]Doidge, N, (2007) The Brain That Changes Itself, Chapter 3, p. 64[[2]].

Technical Viability – Human Brain Plasticity

In the example of Figure 2, the technology synchronizes focused vision with diffuse vision. The diffuse vision is in turn synchronized with sound, which is detected by a microphone, processed, and displayed on eyeglasses or another visual medium, such as a computer screen. Evidence that the senses can interact in this way follows:

- It is known that humans have cognitive reserve, shifting processing areas from one lobe to another{{3}}[[3]]Doidge, N. (2007). The Brain That Changes Itself, Penguin Books, p. 253[[3]].

- Additionally, Merzenich reported that brain maps of normal body parts change every few weeks. He goes on to say that:

“…all our sense receptors translate different kinds of energy from the external world, no matter what the source, into electrical patterns that are sent down our nerves. These electrical patterns are the universal language “spoken” inside the brain – there are no visual images, sounds, smells, or feelings moving inside our neurons.”

- A large body of evidence indicates that the brain demonstrates both motor and sensory plasticity{{4}}[[4]]Bach-y-Rita, A woman perpetually falling, The Brain That Changes Itself, Penguin Books, p. 19, (2007)[[4]].

- DARPA (Defense Advanced Research Projects Agency) has sponsored work where blind persons see by a video camera that stimulates the tongue nerves with a thin plastic electrode placed on it{{5}}[[5]]Bach-y-Rita, P., Kaczmarek, K., and Tyler, M. Part II: Virtual Environments, 8, a tongue-based tactile display for portrayal of environmental characteristics, Virtual and Adaptive Environments: Applications, Implications, and Human Performance Issues, 2003, Lawrence Erlbaum Assoc., Inc., Publisher, Mahwah[[5]].

- Helen Neville and Donald Lawson{{6}}[[6]] Neville, H., Lawson, D. The culturally modified brain, in The Brain That Changes Itself: Stories of Personal Triumph From the Frontiers of Brain Science, 2007, p. 295[[6]] found that deaf people intensify their peripheral vision and move the associated processing to a different location than used by hearing persons. A loss in one brain module leads to structural and functional changes in another.

- As long ago as the 1820s, Flourens{{7}}[[7]] Flourens, P. Review of F. J. Gall, p. 299, 1820, https://www.bcs.rochester.edu/courses/crsinf/240/notes05.pdf[[7]] showed that the brain can reorganize itself. When he removed large portions of a bird’s brain, mental functions were lost. But he found that after a year many of the functions returned, evidence that the remaining parts of the brain had taken over the lost functions.

- Supportive evidence for viability of the technology concept continues to grow.

Sensory Compensation

It is known that there is a cross-link between hearing and vision. With blindness, auditory processing has been reported to expand into brain areas normally reserved for vision. Rauschecker and Korte{{8}}[[8]] Rauschecker, J.P., and Korte, M. (1993). Auditory compensation for early blindness in cat cerebral cortex, The Journal of Neuroscience, Oct. 13(10): 4538-4548, Laboratory of Neurophysiology, National Institute of Mental Health[[8]] commented as follows:

“… blindness causes compensatory increases in the amount of auditory cortical representation, possibly by an expansion of nonvisual areas into previously visual territory. In particular, they provide evidence for the existence of neural mechanisms for intermodal compensatory plasticity in the cerebral cortex of young animals. The changes described here may also provide the neural basis for a behavioral compensation for early blindness described elsewhere.”

Synesthesia

Synesthesia is further evidence of cross-linking between the senses. Synesthesia is characterized by excitation of a sensory pathway followed by involuntary activation of additional sensory pathways. Synesthetes may hear sounds in response to smell, see colors when they hear words, or taste shapes. Any of the senses can be involved in synesthesia.

Subliminal Visual Effects

Subliminal visual effects are sensory stimuli below an individual’s threshold for conscious perception. Visual stimuli may be quickly flashed before an individual can process them, or flashed and then masked, thereby interrupting the processing. Subliminal stimuli activate specific regions of the brain despite participants being unaware.

Working with considerably fewer training events than the technology suggested in this post would involve, Watanabe et al.{{9}}[[9]] Watanabe, T., Nanez, J. E. & Sasaki, Y. Perceptual learning without perception. Nature 413, 844 – 848 (2001)[[9]] have shown that subliminal training is achievable. In their study, subliminal motion of dots in the background of a focused task (reading letters) led to a significantly enhanced ability to consciously respond to such movements when encountered later.

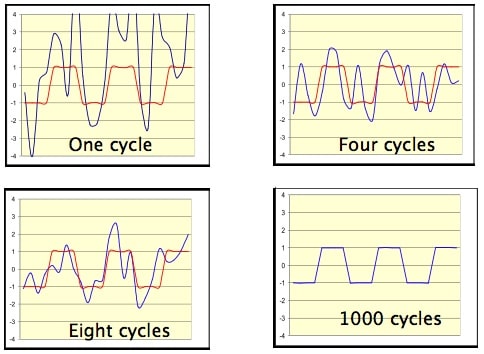

Figure 3. In this example, repetition of an audio experience extracts the meaning of the diffuse imprint from “noise.”

Repetition

With repetition of an audio experience, the brain extracts the meaning of the diffuse imprint from “noise” (Figure 3).

Resolution Requirements

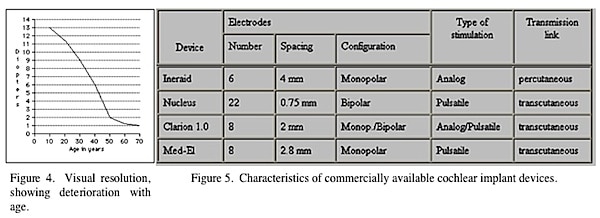

Visual: Resolution requirements are low, and human accommodation of focus deteriorates with age, as seen in Figure 4.

Hearing: Sound frequency resolution requirements are also low, as shown in Figure 5. As few as 6, and as many as 22, frequency bins are used in cochlear implant devices. Applying optical technology that can easily provide more than 25 frequency bins to manage sound frequency resolution seems reasonable.
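To give a feel for how coarse 25 frequency bins really is, here is a minimal sketch of splitting one short frame of microphone samples into 25 bands. It is only an illustration and not the presenters' method: the function name `band_energies`, the 100 Hz to 8000 Hz range, the logarithmic spacing of the bands, and the 256-sample frame are all assumptions made for this example.

```python
import cmath
import math


def band_energies(frame, sample_rate, n_bands=25, f_lo=100.0, f_hi=8000.0):
    """Sum the spectral power of one audio frame into n_bands frequency bins.

    Hypothetical helper: band count, range, and log spacing are assumptions.
    """
    n = len(frame)
    energies = [0.0] * n_bands
    log_span = math.log(f_hi / f_lo)
    for k in range(1, n // 2):
        freq = k * sample_rate / n  # center frequency of DFT index k
        if freq < f_lo or freq >= f_hi:
            continue
        # Naive DFT coefficient for index k (fine for short frames).
        coeff = sum(
            x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(frame)
        )
        # Logarithmically spaced bands: equal frequency ratios per band.
        band = int(n_bands * math.log(freq / f_lo) / log_span)
        energies[min(band, n_bands - 1)] += abs(coeff) ** 2
    return energies


# Usage: a 1 kHz test tone sampled at 16 kHz, 256 samples long.
tone = [math.sin(2 * math.pi * 1000 * t / 16000) for t in range(256)]
energies = band_energies(tone, 16000)
loudest = energies.index(max(energies))
print(len(energies), loudest)
```

With such a short frame, the lowest bands are narrower than the spacing between DFT frequencies, so some of them stay empty. A real device would have to trade frame length against response time, which is one of the open research questions.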

Hardware For Hearing With the Retina

The necessary hardware building-block technologies already exist. These consist of:

- Eyeglass technology and materials

- Microphones

- Analyzing electronics (frequency separation, phoneme recognition, etc.)

- Segment drivers
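As a rough illustration of the step from analyzing electronics to segment drivers, the sketch below turns a list of per-band sound energies into one drive level per liquid crystal segment. Everything specific here is an assumption for the sake of the example: the function name `segment_levels`, the 0 to 255 drive range, the one-segment-per-band layout, and the 40 dB window below the loudest band. The presentation did not specify any of these.

```python
import math


def segment_levels(energies, max_level=255, floor_db=-40.0):
    """Map per-band energies to integer drive levels, one per lens segment.

    Hypothetical mapping: the loudest band gets max_level, and anything
    floor_db or more below it gets 0, with a linear-in-decibels ramp between.
    """
    peak = max(energies)
    if peak <= 0.0:
        return [0] * len(energies)  # silence: leave every segment off
    levels = []
    for energy in energies:
        if energy <= 0.0:
            levels.append(0)
            continue
        db = 10.0 * math.log10(energy / peak)  # 0 dB at the loudest band
        fraction = max(0.0, 1.0 - db / floor_db)  # 1.0 at peak, 0.0 at floor
        levels.append(round(fraction * max_level))
    return levels


# Usage: four bands, from loud to silent.
print(segment_levels([100.0, 1.0, 0.0, 1e-6]))
```

A decibel scale is used because loudness perception is roughly logarithmic, but whether contrast should follow loudness in this way is exactly the kind of question listed below as still needing research.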

What is still needed is research to determine the best combinations of:

- Contrast

- Frequency selections

- Diffuse image placements

- Phonemes vs frequencies

- Peripheral vision regions

- And more

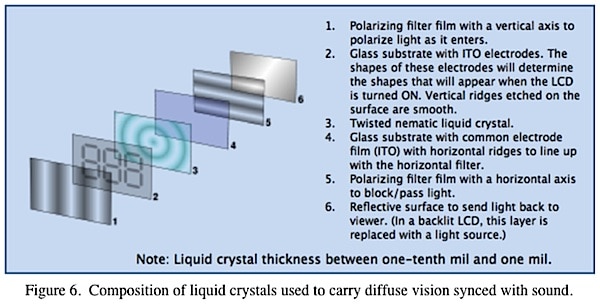

Liquid Crystals

- These need not degrade clarity of vision, and they operate at low voltage and high speed.

- Hundreds of thousands of individually controlled areas within the size of an eyeglass lens are routine and inexpensive.

- Figure 6 shows the composition of liquid crystals used in this work.

Take Home

The authors of this presentation given at the American Auditory Society Scientific and Technology Meeting in Scottsdale, AZ in March state the following as goals of hearing with the retina. They believe these not only to be laudable, but also attainable:

- Might this provide new life for the billion hearing impaired persons?

- Might this allow deaf children to have a normal hearing person’s conversation?

- Might this allow for seeing and hearing at the same time, just as with normal visual and hearing persons?