Part II of a Series on the Use of Two Ears for Hearing

In a recent post, I wrote about hearing with two ears, and listed the following among the major phenomena involved:

- Head shadow effect

- Sound localization

- Loudness squelch

- Facilitation in noise (masking level difference)

- Binaural summation

Collectively, these phenomena result in at least the following practical advantages of binaural hearing:

- Improvement in the intelligibility of speech in noise,

- Reduction of unpleasant background noise (Koenig effect),

- Enhancement in the ability to localize sound, and

- Elimination of the head-shadow effect that occurs when the head is positioned between the sound source and the aided ear.

The earlier post on binaural hearing described the head shadow effect and how it can either improve or reduce intelligibility through its effect on the signal-to-noise ratio of sounds, primarily speech, arriving at the ears. Head shadow is also involved in sound localization, which further supports the use of two ears for listening. Here, the discussion of hearing with two ears continues with a focus on sound localization.

Sound Localization Happenings

- When a sound is perceived, we often simultaneously perceive the location of that sound.

- Interestingly, a given sound contains absolutely no physical property that designates its location.

- Therefore, the ability to localize sound must be derived by neural processing of the signals reaching the ears, since the stimulus itself carries no positional cue.

A Listener Is More Comfortable When the Speaker Can be Located Accurately

It is well known that a listener is more comfortable when the speaker can be located accurately. A 1958 article by Dr. Raymond Carhart described the overall effect well when he stated:

“The world of sound assumes full three dimensionality only when the capacity for spatial localization can be used to its fullest. Normal ability to distinguish sounds of biological and social importance in unfavorable listening situations requires that two independent sequences of neural events (time of arrival and intensity – author addition), one from each inner ear, activate the central nervous system. Under such circumstances sources of sound are more effectively localized than when a single train of neural events is present or when two trains are identical.”

“When independent sequences of neural events are present, the listener can distinguish more effectively among competing sounds arising from different points in his environment. This capacity allows him to hold his attention on the stimulus which is at the moment important even though the situation is, acoustically, quite chaotic.”

Our ability to determine where sounds come from is due to the fact that humans possess two ears separated in space. If one ear is lost, sound localization is severely degraded.

Localization or Lateralization?

It is common to see these two words used, combined, and confused when describing what is happening at the ears with two-eared hearing.

Localization commonly describes the directional effect (where the sound seems to come from) of a stereophonic sound image; lateralization, on the other hand, refers to an image heard over headphones. The primary distinction is that in lateralization, the fused binaural image heard over headphones is internalized, meaning that it is heard in or near the head. The most prominent property of this internally fused image is its apparent lateral position, that is, how far toward the left or right ear it seems to lie. Localization, by contrast, occurs in free space, where the sound is heard externally, and cues of time, intensity, and phase are used to specify where the external sound image lies. Head shadow enters into localization as well and was covered in a previous post on binaural hearing.

Point of Origin Location of Sound

Fletcher (1929) demonstrated that binaural hearing has always been with us, and its importance lies mainly in the fact that it enables us to pinpoint a sound source at its point of origin. Even primitive auditory systems need to inform their owners of what is threatening and where the threat comes from. This dictates the direction for visual contact and offers directions for flight.

Von Békésy (1960) stated that the binaural phenomenon, especially dealing with localization, is incredibly complex. He added that in no other field of science does a stimulus produce so many different sensations as in the area of directional hearing. So, is it any wonder that hearing aids find it difficult to manage localization, especially when the sound source is what might be called “far,” rather than “near”?

Localization depends on two major features:

- The intensity of the signal at each ear (interaural intensity differences), and

- The times at which each ear receives corresponding portions of the sound waves (interaural time-of-arrival differences).

Some sources list phase (period-related time) as a third factor, while others treat the phase change of the signal as a by-product of the difference in its time of arrival at the two ears. Either way, a phase difference arises because the sound wave reaches one ear slightly before the other.
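
The link between time of arrival and phase can be made concrete. A wave completes 360 degrees of phase in one period (1/f seconds), so a time-of-arrival difference Δt corresponds to a phase difference of 360 · f · Δt degrees. The minimal Python sketch below applies that relation; the function name is hypothetical, and the 0.5-ms delay in the usage lines is an illustrative value, not a measurement from this post.

```python
def interaural_phase_difference_deg(frequency_hz: float, itd_s: float) -> float:
    """Return the phase difference (degrees) between the two ears.

    A sinusoid advances 360 degrees per period (1 / frequency_hz seconds),
    so a time-of-arrival difference of itd_s seconds corresponds to
    360 * frequency_hz * itd_s degrees of phase.
    """
    return 360.0 * frequency_hz * itd_s


if __name__ == "__main__":
    itd = 0.0005  # hypothetical 0.5-ms time-of-arrival difference
    for f in (200.0, 500.0, 1000.0):
        phase = interaural_phase_difference_deg(f, itd)
        print(f"{f:6.0f} Hz: {phase:6.1f} degrees")
```

With the same 0.5-ms delay, the phase difference grows with frequency (36, 90, and 180 degrees at 200, 500, and 1000 Hz), which is why one time-of-arrival difference shows up as different phase changes at different frequencies.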

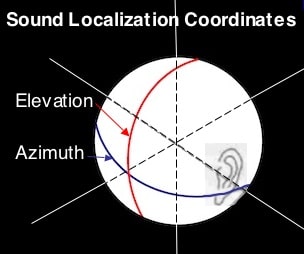

Figure 1. Two coordinates used by psychophysicists to determine the location of sound sources. The “azimuth” is used for sounds arriving in the horizontal plane, and “elevation” is used for sounds arriving in the vertical plane.

Sound Localization

Psychophysicists describe localization using two coordinates: azimuth (horizontal plane) and elevation (vertical plane) (Figure 1). Human localization relies primarily on the horizontal plane (azimuth), so vertical localization will receive little discussion here.
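
To make the two coordinates concrete, the sketch below converts an azimuth and elevation into a direction vector. The axis convention is an assumption for illustration (0 degrees azimuth is straight ahead, positive azimuth is toward the listener's right, positive elevation is upward); not every text uses the same convention, and the function name is hypothetical. The usage lines also show that a source at 30 degrees (front right) and one at 150 degrees (back right) share the same left-right component, a simple geometric way to see the front/back ambiguity noted for Figure 4.

```python
import math


def direction_vector(azimuth_deg: float, elevation_deg: float) -> tuple[float, float, float]:
    """Convert azimuth/elevation (degrees) into a unit vector (right, front, up).

    Assumed convention: azimuth 0 = straight ahead, +90 = listener's right;
    elevation 0 = horizontal plane, +90 = directly overhead.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    right = math.cos(el) * math.sin(az)  # left-right component
    front = math.cos(el) * math.cos(az)  # front-back component
    up = math.sin(el)                    # vertical component
    return (right, front, up)


if __name__ == "__main__":
    for az in (0.0, 30.0, 90.0, 150.0):
        r, f, u = direction_vector(az, 0.0)
        print(f"azimuth {az:5.1f}: right={r:+.3f} front={f:+.3f} up={u:+.3f}")
```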

Interaural Intensity Differences

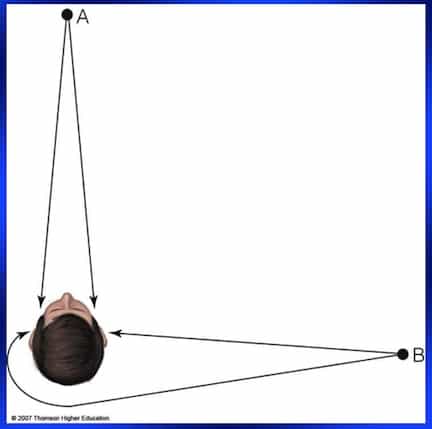

Figure 2. Interaural intensity difference (IID) effects as a result of the signal's angle of incidence and the head shadow. Sound from point A reaches both ears at the same time, and no interaural intensity difference occurs between the ears. When sound comes from point B, the head shadow causes the sound in the far ear to be heard with less intensity, especially at high frequencies. (Illustration ©2007 Thomson Higher Education.)

Sound coming from directly in front (point A in Figure 2) will be the same in both ears (assuming symmetrical hearing sensitivity). However, if the sound comes from somewhat to the right (point B) or left (the reverse case), it will be slightly louder in the ear closest to the source (the near ear), because the head blocks the sound's direct path to the far ear (head shadow). In the real world, listeners rely on the stimuli that arrive first at the ears to determine the direction of a sound source, primarily because these “first-arrival” signals carry the greatest loudness/intensity. The difference in sound level reaching the two ears is called the Interaural Level Difference or Interaural Intensity Difference (ILD or IID). The IID is directly related to the head shadow effect discussed in a previous post. Interaural time-of-arrival differences are also thought to play a major role in how a listener determines how far right or left a sound source may be.
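
IIDs are usually expressed in decibels. A minimal sketch, assuming the sound intensity (or sound pressure) at each ear is already known: intensity ratios use 10 log10, pressure ratios use 20 log10, and both give the same IID for the same physical situation. The function names and the numbers in the usage lines are hypothetical, chosen only to show the arithmetic.

```python
import math


def iid_db_from_intensity(intensity_near: float, intensity_far: float) -> float:
    """IID in dB from sound intensities (e.g., W/m^2) at the near and far ears."""
    if intensity_near <= 0 or intensity_far <= 0:
        raise ValueError("intensities must be positive")
    return 10.0 * math.log10(intensity_near / intensity_far)


def iid_db_from_pressure(pressure_near: float, pressure_far: float) -> float:
    """IID in dB from RMS sound pressures (e.g., Pa) at the near and far ears."""
    if pressure_near <= 0 or pressure_far <= 0:
        raise ValueError("pressures must be positive")
    return 20.0 * math.log10(pressure_near / pressure_far)


if __name__ == "__main__":
    # Hypothetical example: the near ear receives 100 times the intensity of
    # the far ear, which corresponds to a 10-to-1 pressure ratio.
    print(f"{iid_db_from_intensity(1e-6, 1e-8):.1f} dB")  # prints 20.0 dB
    print(f"{iid_db_from_pressure(0.02, 0.002):.1f} dB")  # prints 20.0 dB
```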

The head shadow effect refers to the absorption of some of a sound's energy by the head itself, which causes an interaural level difference, that is, a difference in the intensity of the sound at each ear.

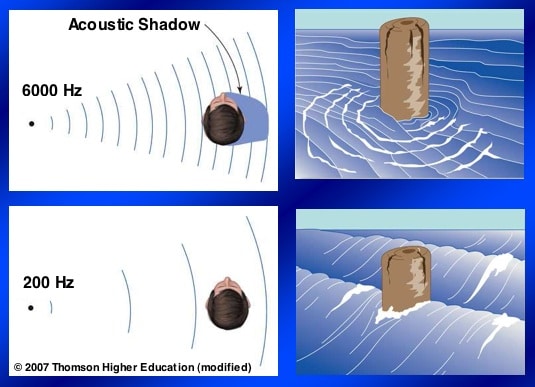

Figure 3. The head shadow effect is primarily active for high-frequency sounds, which have short wavelengths and are blocked and absorbed when they encounter the head. Low-frequency sounds have long wavelengths, are able to “bend” around the head, and therefore produce no significant interaural intensity differences.

However, the amount of absorption (or diffraction) is highly dependent on the frequency of the sound (Figure 3). For low-frequency sounds (below roughly 1500 Hz), the head casts little shadow and the IID is almost non-existent. For frequencies above 1500 Hz, on the other hand, the IID created by the head shadow is substantial and is a useful localization cue.
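
The frequency dependence follows from wavelength, λ = c / f. The sketch below compares wavelength with the width of the head; the speed of sound (343 m/s, roughly its value in room-temperature air) and the head width (0.175 m) are assumed round numbers, not values from this post, and the function names are hypothetical. As a rough rule of thumb, wavelengths much longer than the head bend around it and cast little shadow, while wavelengths near or below the head width are shadowed.

```python
SPEED_OF_SOUND_M_S = 343.0  # assumed: approximate speed of sound in air near 20 degrees C
HEAD_WIDTH_M = 0.175        # assumed: rough adult ear-to-ear distance


def wavelength_m(frequency_hz: float, speed_m_s: float = SPEED_OF_SOUND_M_S) -> float:
    """Wavelength in meters: lambda = c / f."""
    return speed_m_s / frequency_hz


def wavelength_to_head_ratio(frequency_hz: float, head_width_m: float = HEAD_WIDTH_M) -> float:
    """Ratio of wavelength to head width (rule of thumb: well above 1 means the
    wave bends around the head; near or below 1 means a noticeable shadow)."""
    return wavelength_m(frequency_hz) / head_width_m


if __name__ == "__main__":
    for f in (200.0, 1500.0, 6000.0):
        print(f"{f:6.0f} Hz: wavelength {wavelength_m(f) * 100:6.1f} cm, "
              f"{wavelength_to_head_ratio(f):4.1f} x head width")
```

Under these assumptions, 200 Hz has a wavelength of about 1.7 m (nearly ten times the head width), 1500 Hz about 23 cm (close to the head width), and 6000 Hz under 6 cm (about a third of the head width), consistent with the pattern described above.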

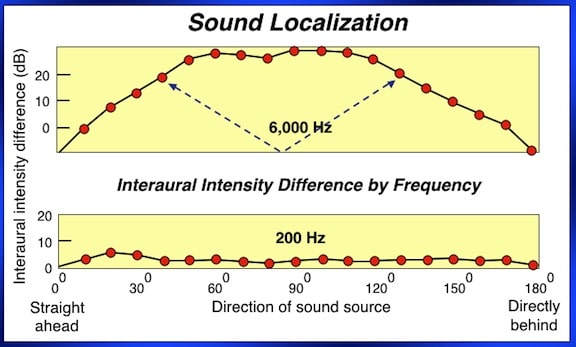

In the horizontal plane, the IID (the disparity between the amount of acoustic energy that reaches the left and right ears) varies with frequency (Figure 4). For human hearing, most sounds of interest arrive from around the horizontal plane rather than from above or below. For high tones (the 6000-Hz example), the acoustic energy arriving at the two ears can differ by as much as 30 dB, with the difference most prominent when the signal arrives from between about 45 and 130 degrees. For low frequencies (the 200-Hz example), little or no acoustic energy difference exists between the sound arriving at the two ears. Low-frequency sounds are less affected by the head shadow because their long wavelengths bend around large objects such as the head. The higher the frequency, the greater the shadow produced by the head.

Figure 4. Interaural intensity differences (IIDs), showing that, at least for high-frequency sounds, the IID can be the same for sounds coming from the front or back, resulting in ambiguity about the location of the sound (the blue dashed lines show one example). Low frequencies have little or no IID. Note: humans cannot localize a 200-Hz tone, or other low-frequency sounds, on the basis of IIDs.

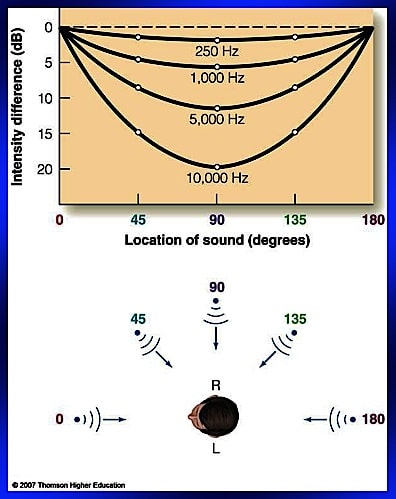

Figure 5 expands upon Figure 4 by showing additional frequencies and relating the interaural intensity differences (IIDs) to the location (angle in degrees) of the sound source. The IID values on the Figure 5 graph are plotted inverted, but the information is the same.


This discussion on sound localization will continue in next week’s post. The interaural time-of-arrival differences (ITDs) will be featured.

References:

- Carhart, R. (1958). The usefulness of the binaural hearing aid. Journal of Speech and Hearing Disorders, February.

- Fletcher, H. (1929). Speech and Hearing in Communication. New York: D. Van Nostrand.

- Von Békésy, G. (1960). Experiments in Hearing. New York: McGraw-Hill.

Wayne Staab, PhD, is an internationally recognized authority in hearing aids. As President of Dr. Wayne J. Staab and Associates, he is engaged in consulting, research, development, manufacturing, education, and marketing projects related to hearing. His professional career has included university teaching, hearing clinic work, hearing aid company management and sales, and extensive work with engineering in developing and bringing new technology and products to the discipline of hearing. This varied background allows him to couple manufacturing and business with the science of acoustics to bring innovative developments and insights to our discipline. Dr. Staab has authored numerous books, chapters, and articles related to hearing aids and their fitting, and is an internationally requested presenter. He is a past President and past Executive Director of the American Auditory Society and a retired Fellow of the International Collegium of Rehabilitative Audiology.

**This piece has been updated for clarity. It was originally published on February 24, 2015.**