Sound Localization – Time-of-Arrival Differences at the Ears

Time-of-arrival of sound at the two ears is an important contributor to sound localization. In this continuation of a series on binaural hearing, special attention is given to this second major contributor. Last week’s post on localization featured interaural intensity difference (IID), the other major contributor to sound localization by humans. Interaural means “between the ears.”

Interaural Time-of-Arrival Differences (ITD)

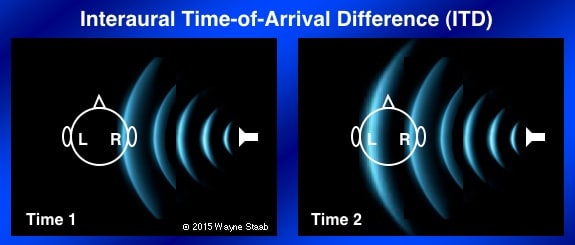

Figure 1. Sound from the front arrives at the two ears at essentially the same time and is heard with no time delay difference between the ears.

Normally, sounds generated in the environment travel through air and arrive at both ears. Sound travels quickly through air, but it still takes a short amount of time to reach the ears. This time element provides useful information that helps the auditory system determine where the sound originates. If the sound comes directly from the front (Figure 1), the distance to each ear is the same, and the sound arrives, and is heard, at both ears at the same time. When the sound source is an equal distance from both ears, at either 0° or 180°, the ITD is equal to 0.
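In terms of the distances SL and SR labeled in Figure 2, the ITD is the path-length difference divided by the speed of sound, c. The worked example below assumes c ≈ 343 m/s (air at about 20 °C) and a hypothetical path difference of 0.2 m; neither value comes from this post.

$$
\mathrm{ITD} = \frac{S_L - S_R}{c}, \qquad
\frac{0.2\ \text{m}}{343\ \text{m/s}} \approx 5.8 \times 10^{-4}\ \text{s} \approx 0.58\ \text{msec}
$$

For a source at 0° or 180°, $S_L = S_R$, so the numerator, and therefore the ITD, is 0.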

Figure 2. Interaural time-of-arrival difference (ITD) of a sound arriving at the two ears. In this case, the distance SL (sound left) is greater than SR (sound right), meaning that the sound waves reach the right ear (near ear) slightly sooner than the left ear (far ear).

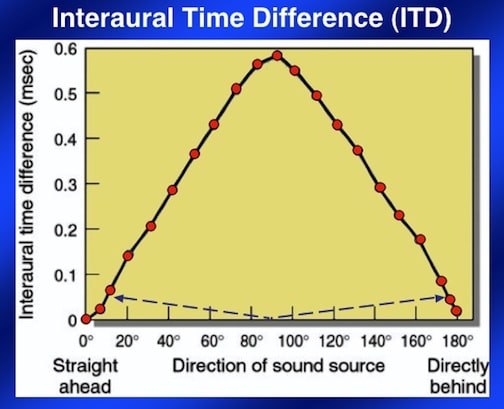

On the other hand, when the sound comes from any direction other than directly in front or directly behind, the time of arrival at the right and left ears differs. The magnitude of this interaural time-of-arrival difference (ITD) depends on the azimuth of the sound source, and it is greatest when the sound comes from 90° to one side or the other (Figure 2). In the horizontal plane, this results in a delay of approximately 0.6 msec in the signal arriving at the contralateral (far) ear (Figure 3). The human auditory system can respond to the very small ITDs that result from displacing the sound source by just a few degrees of azimuth.
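The growth of ITD with azimuth can be sketched with Woodworth’s spherical-head approximation, a classic textbook model that this post does not itself use. The head radius (8.75 cm), the speed of sound (343 m/s), and the function name are assumptions chosen for illustration; real heads are not spheres, so treat the numbers as approximate.

```python
import math

# Assumed values (typical textbook figures, not taken from this post)
HEAD_RADIUS_M = 0.0875      # radius of an idealized spherical head, in metres
SPEED_OF_SOUND_M_S = 343.0  # speed of sound in air at about 20 degrees C


def woodworth_itd_ms(azimuth_deg: float,
                     radius_m: float = HEAD_RADIUS_M,
                     c: float = SPEED_OF_SOUND_M_S) -> float:
    """Approximate ITD in milliseconds for a distant source in the horizontal plane.

    Woodworth's spherical-head formula, ITD = (r / c) * (theta + sin(theta)),
    for azimuths from 0 degrees (straight ahead) to 90 degrees (directly to one side).
    """
    if not 0.0 <= azimuth_deg <= 90.0:
        raise ValueError("azimuth must be between 0 and 90 degrees")
    theta = math.radians(azimuth_deg)
    return 1000.0 * (radius_m / c) * (theta + math.sin(theta))


if __name__ == "__main__":
    for az in (0, 5, 15, 30, 45, 60, 90):
        print(f"{az:3d} deg -> {woodworth_itd_ms(az):.3f} ms")
```

With these assumed values the model gives about 0.66 ms at 90°, close to the approximately 0.6 msec quoted above, and only about 45 microseconds at 5°, which shows how small the usable ITDs are.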

Figure 3. Interaural time difference (ITD) in msec from 0 degrees through 180 degrees azimuth. The same ITD occurs for different horizontal azimuth positions around the head (blue dashed line values as examples). Note that a sound from the front and a sound from the rear can produce the same ITD; this ambiguity about where the sound is coming from leads to frequent front/back localization errors.

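Although the auditory system makes use of both IIDs (last week’s post) and ITDs, ITDs are thought to play the more significant role in judging how far to the left or right a sound source is. The ITD is fundamental to localizing sound sources with frequencies below about 1500 Hz. Above 1500 Hz, the ITD cue for steady tones becomes ambiguous: the period of the wave becomes comparable to or shorter than the largest possible ITD, so the same phase relationship between the ears can correspond to more than one delay. Both abrupt-onset and low-frequency sounds give rise to a usable ITD because sound reaches one ear before the other.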
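The statement that ITD cues become ambiguous at higher frequencies can be illustrated with a short sketch. It models only a steady pure tone and treats the interaural phase difference (IPD) as the cue; the function name and the two ITD values are hypothetical choices for illustration, not taken from this post.

```python
def interaural_phase_deg(freq_hz: float, itd_s: float) -> float:
    """Interaural phase difference, in degrees, of a pure tone delayed by itd_s.

    The value is wrapped into [-180, 180) because a shift of one whole cycle
    (360 degrees) is indistinguishable from no shift for a steady tone.
    """
    raw = 360.0 * freq_hz * itd_s
    return (raw + 180.0) % 360.0 - 180.0


small_itd, large_itd = 0.00005, 0.00055   # 0.05 ms and 0.55 ms (illustrative)

# 500 Hz (period 2 ms): the two ITDs give clearly different phase differences.
print(interaural_phase_deg(500, small_itd), interaural_phase_deg(500, large_itd))

# 2000 Hz (period 0.5 ms): 0.55 ms is one full cycle plus 0.05 ms,
# so both ITDs produce the same phase difference.
print(interaural_phase_deg(2000, small_itd), interaural_phase_deg(2000, large_itd))
```

At 500 Hz the two delays map to clearly different phases (9° versus 99°), while at 2000 Hz a small and a large ITD become indistinguishable from phase alone.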

Duplex Theory of Sound Localization

The duplex theory of sound localization was developed by Lord Rayleigh (between 1877 and 1907) and takes into account both interaural intensity differences (IIDs) and interaural time differences (ITDs). The theory states that ITDs are used to localize low-frequency sounds and IIDs are used to localize high-frequency sounds (IIDs were explained in the previous post).
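The physical reasoning usually given for the duplex theory can be illustrated by comparing the wavelength of a tone with the width of the head: long wavelengths bend around the head and produce little intensity difference, while short wavelengths are shadowed by it. The sketch assumes a speed of sound of 343 m/s and a head width of 17.5 cm; both are illustrative values, and the real transition is gradual rather than the sharp boundary the code prints.

```python
SPEED_OF_SOUND_M_S = 343.0  # assumed: air at about 20 degrees C
HEAD_WIDTH_M = 0.175        # assumed: typical adult head width (ear to ear)


def wavelength_m(freq_hz: float, c: float = SPEED_OF_SOUND_M_S) -> float:
    """Wavelength of a tone in air: lambda = c / f."""
    return c / freq_hz


for f in (250, 500, 1000, 1500, 2000, 4000, 8000):
    lam = wavelength_m(f)
    if lam > HEAD_WIDTH_M:
        note = "longer than head: bends around it, little shadow (ITD favored)"
    else:
        note = "shorter than head: head casts a shadow (IID grows)"
    print(f"{f:5d} Hz  wavelength {lam * 100:5.1f} cm  {note}")
```

At 1500 Hz the wavelength (about 23 cm) is still longer than the assumed head width, and it falls below it near 2000 Hz.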

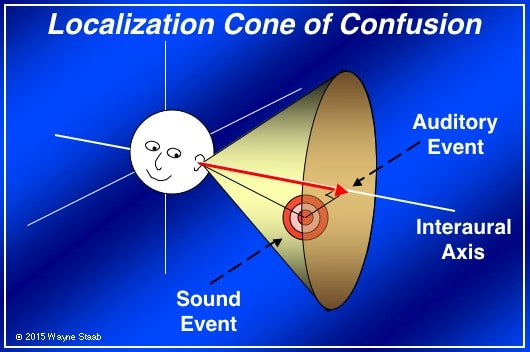

Localization by human adults is relatively poor between 2,000 and 4,000 Hz, where sensitivity to both ITDs and IIDs is low. Additionally, as shown in Figure 3 of this post and Figure 3 of the previous post, a particular ITD or IID can arise at more than one azimuth, producing ambiguities, especially front/back errors. This gives rise to what is often called the “cone of confusion” (Figure 4).

The pinna casts an acoustic shadow over sounds that originate from behind the listener. Sounds above 2000 Hz coming from behind are about 2-3 dB lower in amplitude than sounds originating from the front. This spectral difference is generally accepted as an additional cue that aids both elevation judgments and front/back discrimination.
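To put the 2-3 dB figure in perspective, a level difference $\Delta L$ in decibels corresponds to a pressure-amplitude ratio of $10^{-\Delta L/20}$. This is a standard conversion, not a calculation from the post:

$$
\frac{p_{\text{back}}}{p_{\text{front}}} = 10^{-\Delta L/20}, \qquad
10^{-2/20} \approx 0.79, \qquad
10^{-3/20} \approx 0.71
$$

So sounds above 2000 Hz arriving from behind have roughly 21% to 29% less pressure amplitude than the same sounds from the front.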

Figure 4. Cone of confusion that can result from simple interaural cues. Fortunately, a normally functioning human auditory system can usually resolve such cone of confusion conditions.

Cone of confusion – Simple interaural cues cannot, on their own, tell the listener whether a sound is in front, behind, above, or below. For example, a sound at 45° to the left and in front will have the same ITD as a sound at 45° to the left and behind. The same holds true for sounds on the right.

To add to the confusion, the same ambiguity occurs for sounds from above and below. In other words, a cone of confusion can occur at every position between directly left and directly right of a listener’s head. Fortunately, a normally functioning human auditory system can usually resolve such cone of confusion conditions.
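Why these positions are confused can be sketched with an idealized spherical head, in which the ITD depends only on the angle between the source and the median plane (the “lateral angle”). Every direction sharing that angle lies on a cone around the line through the two ears. The head radius, speed of sound, coordinate convention, and function names below are illustrative assumptions; real heads and pinnae break this symmetry, which is part of how listeners resolve it.

```python
import math

# Assumed spherical-head parameters (illustrative, not from this post)
HEAD_RADIUS_M = 0.0875
SPEED_OF_SOUND_M_S = 343.0


def lateral_angle_deg(azimuth_deg: float, elevation_deg: float) -> float:
    """Angle between the source direction and the median plane, in degrees.

    Azimuth is measured from straight ahead toward the left, elevation upward.
    The interaural component of the unit direction vector is
    cos(elevation) * sin(azimuth); its arcsine is the lateral angle.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    y = math.cos(el) * math.sin(az)
    return math.degrees(math.asin(max(-1.0, min(1.0, y))))


def spherical_itd_ms(azimuth_deg: float, elevation_deg: float) -> float:
    """Woodworth-style ITD that depends only on the lateral angle."""
    lat = math.radians(abs(lateral_angle_deg(azimuth_deg, elevation_deg)))
    return 1000.0 * HEAD_RADIUS_M / SPEED_OF_SOUND_M_S * (lat + math.sin(lat))


positions = {
    "45 deg left, in front": (45, 0),
    "45 deg left, behind": (135, 0),
    "directly left, 45 deg up": (90, 45),
    "directly left, 45 deg down": (90, -45),
}
for label, (az, el) in positions.items():
    print(f"{label:28s} ITD = {spherical_itd_ms(az, el):.3f} ms")
```

All four positions share a 45° lateral angle and therefore the same ITD in this model, matching the front/back and above/below confusions described above.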

Improving Localization

Turning one’s head can help reduce localization errors. However, a head turn takes about 500 msec, considerable time by neural standards. The pinna, by contrast, contributes passively: sound reflects off its folds (it “bounces around”) before entering the ear canal. How much this passive feature contributes depends on the direction from which the sound originates and on the frequencies involved, and it applies primarily to vertical sound localization.
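One way to see why turning the head helps is to track how the ITD changes during the turn. The sketch below reuses the idealized spherical-head model (an assumption, not the author’s method), with hypothetical function names and an azimuth convention measured clockwise from straight ahead. Two mirror-image sources, one in front and one behind, start with identical ITDs, but a small head turn moves their ITDs in opposite directions.

```python
import math

# Assumed spherical-head parameters (illustrative, not from this post)
HEAD_RADIUS_M = 0.0875
SPEED_OF_SOUND_M_S = 343.0


def itd_ms(azimuth_deg: float) -> float:
    """Signed horizontal-plane ITD in ms; positive means the right ear leads.

    Azimuth is measured clockwise from straight ahead (90 = right, 180 = behind).
    asin(sin(.)) folds a rear azimuth onto its front mirror image, which is
    exactly why front and back sources share an ITD.
    """
    lat = math.asin(math.sin(math.radians(azimuth_deg)))
    return 1000.0 * HEAD_RADIUS_M / SPEED_OF_SOUND_M_S * (lat + math.sin(lat))


def itd_after_head_turn(source_az_deg: float, turn_right_deg: float) -> float:
    """ITD after the listener turns the head to the right by turn_right_deg."""
    return itd_ms(source_az_deg - turn_right_deg)


front, rear = 30.0, 150.0   # mirror-image sources: same ITD with the head still
print(f"before turn: front {itd_ms(front):.3f} ms, rear {itd_ms(rear):.3f} ms")
print(f"after 10 deg turn right: front {itd_after_head_turn(front, 10):.3f} ms, "
      f"rear {itd_after_head_turn(rear, 10):.3f} ms")
```

Turning right shrinks the ITD of the source in front (it moves toward the midline) and enlarges the ITD of the source behind, so the direction of change distinguishes front from back.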

Can Localization Occur if the Two Ears are Dissimilar?

Altschuler and Comalli commented that even when the two ears remain “unequal” after being aided, the important cues for localization (time, phase, and intensity) are still perceived and used to advantage, provided the sound is intense enough to stimulate both ears.

Quick Office Test of Localization?

The following procedure was developed a number of years ago by Comalli and Altschuler. They suggested purchasing an inexpensive small loudspeaker that could be moved around the listener in a one-yard circle. With eyes closed, the listener locates the source of the sound as it is moved from right to left (or vice versa), either by pointing or by saying “right,” “left,” or “center.” Narrow bands of noise can be used as the signal. For normal listeners, “center” spans “12 o’clock” ±10 degrees.

They recommended excluding hearing aids that produce a “center” wider than this in favor of those that more closely approximate the “normal” standard. This might be a good test to use with directional-microphone hearing aids, whether adaptive or fixed.
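Scoring the quick office test against the ±10 degree criterion can be sketched as follows. The function names, the degree convention (negative = left of 12 o’clock), and the response data are hypothetical, for illustration only; the sketch also assumes the “center” responses form one contiguous zone.

```python
from typing import Dict, Tuple

# Criterion from the text: for normal listeners, "center" is 12 o'clock +/- 10 degrees.
NORMAL_CENTER_DEG = 10


def center_zone(responses: Dict[int, str]) -> Tuple[int, int]:
    """Leftmost and rightmost loudspeaker angles the listener called "center".

    Angles are in degrees from 12 o'clock: negative = left, positive = right.
    """
    centers = [angle for angle, answer in responses.items() if answer == "center"]
    if not centers:
        raise ValueError("the listener never responded 'center'")
    return min(centers), max(centers)


def center_within_normal(responses: Dict[int, str],
                         limit_deg: int = NORMAL_CENTER_DEG) -> bool:
    """True if every "center" response lies within +/- limit_deg of 12 o'clock."""
    left, right = center_zone(responses)
    return left >= -limit_deg and right <= limit_deg


# Hypothetical responses, for illustration only (not data from the post)
aid_a = {-30: "left", -20: "left", -10: "center", 0: "center",
         10: "center", 20: "right", 30: "right"}
aid_b = {-30: "left", -20: "center", -10: "center", 0: "center",
         10: "center", 20: "center", 30: "right"}

for name, responses in (("aid A", aid_a), ("aid B", aid_b)):
    print(name, center_zone(responses), center_within_normal(responses))
```

Under the recommendation above, hypothetical aid A (center zone of -10° to +10°) would pass, and aid B (center zone of -20° to +20°) would be set aside in favor of a fitting closer to the normal standard.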

Implications for Hearing Aids

Binaural hearing aid fittings are a must for maximizing localization ability, even though hearing-impaired individuals localize more poorly than normal-hearing persons. Moreover, when fitted with hearing aids and tested at MCL (most comfortable loudness), their localization is poorer than their unaided localization.

Interestingly, localization can improve even when word recognition is poor. A listener is also more comfortable when the talker can be located accurately.

Perhaps, following or concurrent with audibility, the primary goal of a hearing aid fitting should be to provide localization. Could it be that localization, in the normal course of events, is a more significant goal than improving word recognition scores? An interesting thought.

References:

- Blauert, J. (1997). Spatial Hearing: The Psychophysics of Human Sound Localization. Cambridge, MA: The MIT Press.

- Altschuler, M.W., and Comalli, P.C. (1979)

- Comalli, P.C., and Altschuler, M.W. (1980). Auditory localization: methods, research, application. In Libby (Ed.), Binaural Hearing and Amplification. Zenetron Inc.

- Vaillancourt, V., Laroche, C., Giguere, C., Beaulieu, M., and Legault, J. (2011). Evaluation of auditory functions for Royal Canadian Mounted Police officers. J Am Acad Audiol, 22(6), 313-

Wayne Staab, PhD, is an internationally recognized authority in hearing aids. As President of Dr. Wayne J. Staab and Associates, he is engaged in consulting, research, development, manufacturing, education, and marketing projects related to hearing. His professional career has included university teaching, hearing clinic work, hearing aid company management and sales, and extensive work with engineering in developing and bringing new technology and products to the discipline of hearing. This varied background allows him to couple manufacturing and business with the science of acoustics to bring innovative developments and insights to our discipline. Dr. Staab has authored numerous books, chapters, and articles related to hearing aids and their fitting, and is an internationally requested presenter. He is a past President and past Executive Director of the American Auditory Society and a retired Fellow of the International Collegium of Rehabilitative Audiology.

**This piece has been updated for clarity. It was originally published on March 2, 2015.

And nothing messes up localization in hearing aids more than over-processed sound. Especially compression…

I have not examined this myself, but I can imagine this to be true.

A warning is called for in cases where an audiologist is asked to verify a patient’s localization abilities, whether unaided or, especially, aided. No standard test is available. If the patient’s ability to localize is compromised without hearing aids, there is little or no reason to expect that amplification (binaural, of course) will alleviate this deficiency. The Source Azimuth In Noise Test (SAINT) is administered only under headphones, and, unless one uses an R-Sound system, there is no way to objectively test for “normal” or abnormal localization ability. The Hearing In Noise Test can shed some light on the problem, and may correlate with localization tasks, but SNR tests are not the same as tests of localization. This becomes a particularly sticky problem in the testing of peace officers, firemen, and others who must be able to localize sound in hazardous conditions, where their safety and the safety of others may be in jeopardy. Audiologists should not attest to sound localization abilities without using objective, reliable methods for drawing such conclusions.

Excellent comments

Excellent post and comments by all.

The effects of fixed or floating directionality, situational compression, and overall ambient load levels must also be considered, particularly now, as speech recognition, location, and enhancement algorithms throughout our industry and beyond become more robust and widespread.

I can easily visualize future systems with specific voice or sound localization and enhancement features designed to recognize certain voices, or chosen environmental sounds, over others. Such systems would be capable of creating virtual microphone arrays, learning and allowing for the choice of preferences.

Such systems would not only enhance the particular spoken verse of a loved one, or a chosen environmental sound over others, but also present it in such a way that the wearer receives all of the appropriately enhanced cues as to where the selected sound is coming from.

The joy, wonder, and heartache of our field is that Moore’s law waits for no protocol.

By the time one is developed and widely accepted for verification of localization, it may well be obsolete for assessing anything but legacy equipment.

Thanks again for the great articles and comments.

Best Wishes,

Dan……..

I was born with complete hearing loss in my left ear and perfect hearing in my right. I believe that I have a more difficult time localizing sound, especially sound coming from the left or right. I can move my head to get a better idea of where sound is coming from, but I get completely lost when I’m in an environment with lots of reverberation. My good ear faces the wall when I sit at my desk (on my right). If I didn’t know that there was a wall there, I would think that people who come up and talk to me are on my right side. Little movements of my head never seem to dispel that feeling.

I was a participant in that quick office test once. The person testing me made noise as they walked around me (feet clomping on the ground and noise of clothes flapping). I could quickly tell where the sounds were coming from because of that. It’s difficult to walk around without making any sound at all. Even if they aren’t making sounds as they walk, I can pick up on the change in the background noise. I haven’t actually read that document, so I’m not sure if that is already noted.