by James W. Martin, Jr, AuD, and Oliver Townend

Artificial intelligence (AI) seems to be appearing everywhere. For example, BMW and Mercedes are pairing humans with machines to accomplish tasks in the automotive industry that in the past would have been impossible when performed by either in isolation (Daugherty, 2018). This enables flexible human-machine teams to work in a symbiotic relationship, and similar advances are now becoming a part of the hearing amplification landscape.

While AI has many applications, it can also feel like a marketing buzzword attached to product names or descriptions. It can be difficult for hearing care professionals and, in turn, for hearing aid users to understand what an ‘AI’ tag really means or what it is doing. By looking at SoundSense Learn, a direct application of machine learning found in the Widex Evoke smartphone app, this article aims to give the reader a brief introduction to AI and machine learning, the benefits they can bring, and how they can help to increase user satisfaction.

In real life, what an individual wants to hear varies depending on many factors that make up the auditory scenario at the time. They might want to focus on lowering the conversation level around them if they’re sitting by themselves in a coffee shop or, conversely, on increasing the conversation level if they are in company. If they’re listening to music, they may want to accentuate an element within the music. How, who or what an individual wants to hear is described as their listening intention.

The listening intention of hearing aid users is incredibly important. Our challenge is uncovering those intentions and helping to fulfill them through our hearing aids. Most hearing aids today are designed to address listening intentions automatically, but sometimes automation falls short of meeting the needs of hearing aid users (Townend et al., 2018).

Automation is based on algorithms and rules that are driven by certain assumptions. These assumptions are incorporated into the automatic hearing aid processing, with the aim of optimizing the user’s ability to hear in different listening environments. Automatic adaptive systems have additional advantages such as freeing cognitive resources to focus on listening (Kuk, 2017). Using this assumption-based approach, we can move our users closer to overall satisfaction, but there will still be listening needs that are not met by automation.

Often, unmet listening needs result in a fine-tuning appointment back in the clinic, but it may be difficult to explain and address the specific situation that caused problems. In Widex Evoke, we instead provide the end-user with a powerful tool to do fine-tuning in the situation, based on machine learning. To understand this feature, let us take a step back to better understand how AI and machine learning work.

Artificial Intelligence or Machine Learning?

In 1950, Alan Turing proposed the Turing Test (Mueller, 2016). The test is passed when a computer communicates with a human and the human believes they are talking to another human rather than to a computer. In 1956, John McCarthy coined the phrase ‘Artificial Intelligence’, and a new field in computer science was born (Mueller, 2018). AI research flourished but was ineffective until around 1980. Then, between 1980 and 2000, integrated circuits moved computers, artificial intelligence, and machine learning from science fiction to science fact.

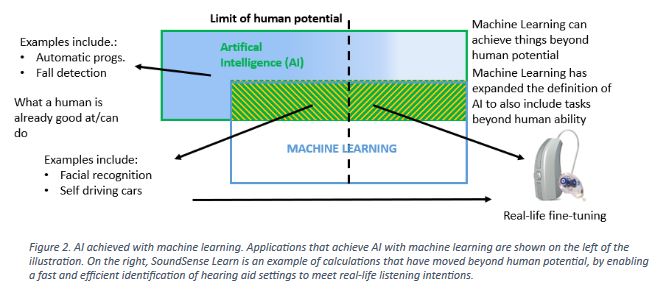

AI is the capability of a machine to imitate intelligent human behavior (Merriam-Webster, 2018). Importantly, just saying that a technology, a device, or an application has artificial intelligence does not say anything about that technology or about how it resolves challenges or problems. Machine learning can be used to achieve artificial intelligence by creating systems that can recognize complex patterns or make intelligent decisions based on data. Applications of machine learning are being used in self-driving cars and cameras with facial recognition. These two examples, driving a car and recognizing faces, are activities that humans are already good at. What comes next is real-life applications of machine learning and AI that go beyond what a human can achieve alone.

Widex has introduced SoundSense Learn in Widex Evoke, the world’s first hearing aid that uses real-time machine learning to empower end users to make adjustments based on their preferences and intentions in different environments. This is made possible by using a distributed computing approach, leveraging the power of a smartphone connected to a hearing aid to incorporate a live machine-learning application. The power of machine learning combined with a simple user interface frees the end user to focus on the quality of the sound without having to learn and manipulate numerous adjustment parameters.

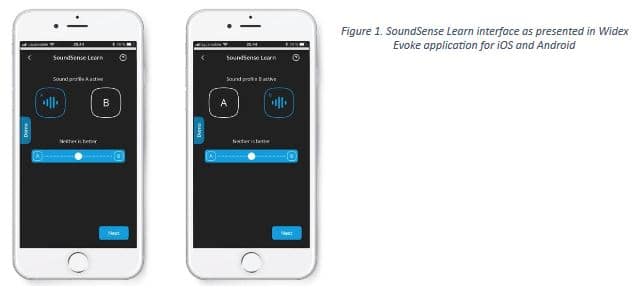

Via a simple interface, SoundSense Learn (fig. 1) can learn and meet the end-user’s preferences and intentions. Machine learning allows the hearing aid to meet the listening intention because the process is driven by the end-user and their preference in that moment.

Widex SoundSense Learn implements a well-researched machine-learning approach for individual adjustment of hearing aid parameters (Nielsen, 2014). This technique efficiently compares complex combinations of settings by collecting user input via a simple interface.

To help understand how efficient this process is, let us calculate how much manual human work it is replacing. For example, if we have three acoustic parameters (low, mid and high frequency) that can each be set to 13 different levels, we have a total of 13 × 13 × 13 = 2,197 combinations of the three settings. To sample all these combinations and compare them against one another in order to find the optimal setting would require over 2 million comparisons: an impossible number for a human to manage. However, because machine learning is integrated within this process, SoundSense Learn can reach the same optimal outcome in 20 steps or fewer, which is much faster and takes much less effort (Townend, Nielsen, Balslev, 2018).
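The arithmetic behind these figures can be checked with a short sketch. The article does not state how the comparisons are counted, so the code assumes that every unique pair of settings is compared exactly once, a reading that is consistent with the "over 2 million" figure. The function and constant names are illustrative only and are not part of any Widex software.

```python
from math import comb

LEVELS_PER_PARAMETER = 13  # levels available for each parameter (from the text)
NUM_PARAMETERS = 3         # low, mid and high frequency (from the text)


def count_settings(levels: int, parameters: int) -> int:
    """Number of distinct combinations of parameter levels."""
    return levels ** parameters


def count_pairwise_comparisons(settings: int) -> int:
    """Assumption: every unique pair of settings is compared exactly once."""
    return comb(settings, 2)


settings = count_settings(LEVELS_PER_PARAMETER, NUM_PARAMETERS)
comparisons = count_pairwise_comparisons(settings)

print(f"{settings:,} possible settings")
print(f"{comparisons:,} pairwise comparisons")
```

Under that assumption the script reports 2,197 settings and 2,412,306 pairwise comparisons, which puts the 20 steps quoted for SoundSense Learn into perspective.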

The simplicity of the user interface means that there is no need for the user to learn the underlying complex controls or parameters. The user’s task is just to focus on their perception of the sound in the moment and adjust it based on their preferences.

In Widex Evoke, SoundSense Learn can adjust the equalizer settings for immediate listening gratification, so we are not altering the work and programming that the dedicated hearing healthcare professional has put into the fitting. While the permanent programming of the hearing aid is not altered, the end-user still has the power, in real time, to easily refine their acoustic settings to meet their specific real-time listening intention. It is clear that we are entering the territory of collaboration between clinician, end-user and machines, and, with SoundSense Learn, beginning to go beyond what a human can achieve alone (fig. 2).

Data and Evidence

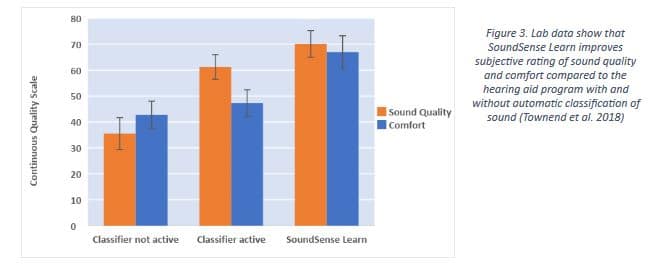

When tested, hearing aid programs created with SoundSense Learn showed subjective improvements in sound quality and comfort over hearing aid programs with or without an automatic classification system.

A double-blind experiment was completed using nine different sound samples with an auditory intention focus task. Subjects were asked to optimize sound quality or optimize listening comfort for pre-recorded sounds that they heard through hearing aids programmed to their hearing loss. One week after the programs were created, subjects were asked to compare them against two hearing aid programs (with and without an automatic classification system) in a double-blind paradigm (Townend et al., 2018).

We saw from the results that SoundSense Learn increased both listener comfort and sound quality when rated against the hearing aid programs without personalization (fig. 3). 84% of participants preferred the hearing aid settings achieved by SoundSense Learn over the hearing aid with the automatic classifier activated. When evaluating sound quality using music samples, 89% of the participants preferred the settings based on SoundSense Learn (Townend, Nielsen, Balslev, 2018).

Our initial study showed that SoundSense Learn helped end-users increase sound quality and listening comfort in a laboratory setting based on individual auditory preferences.

Since launching Widex Evoke with SoundSense Learn, we have been collecting anonymous data that help us observe how users are making use of this feature in those areas of their day-to-day hearing life that can still present challenges. We already have some exciting and remarkable insights from our initial data.

When we look at the initial use sample of SoundSense Learn, approximately 10% of Widex Evoke users who are wearing hearing aid models that can access SoundSense Learn were using this feature on a regular basis (Widex internal data, 2018). We define regular users as those who first use SoundSense Learn to create and save a program and then use the same program again at least once, at least one week later. There are users that we have not accounted for in this way, because they are using SoundSense Learn to adjust their hearing aid settings but are not saving their programs.
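The definition of a regular user can be written down as a simple rule. The sketch below is only an illustration of that rule as worded above; the function name, the argument names and the date-based representation are hypothetical and do not describe how the Widex data is actually stored or analyzed.

```python
from datetime import date, timedelta

ONE_WEEK = timedelta(days=7)


def is_regular_user(saved_on: date, reused_on: list[date]) -> bool:
    """Return True if a saved program was used again at least one week after saving.

    saved_on  -- hypothetical: the day the program was created and saved
    reused_on -- hypothetical: the days on which that same program was used again
    """
    return any(day - saved_on >= ONE_WEEK for day in reused_on)


# A program saved on 1 May and reused only two days later does not qualify...
print(is_regular_user(date(2018, 5, 1), [date(2018, 5, 3)]))
# ...but a further reuse eight days after saving does.
print(is_regular_user(date(2018, 5, 1), [date(2018, 5, 3), date(2018, 5, 9)]))
```

A user who adjusts settings with SoundSense Learn but never saves a program has no saved program to test, which is why such users fall outside this count.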

The fact that many users are not regularly using SoundSense Learn suggests to us that Widex Evoke is automatically providing for most of the varied listening situations users encounter, but that SoundSense Learn is a useful tool for specific situations.

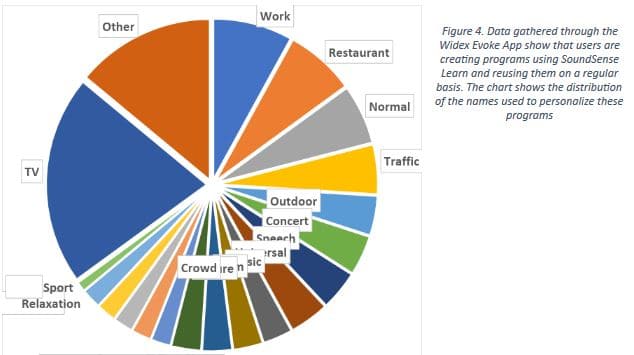

We can see (fig. 4) that the regular users are creating programs, tagging them with a name from the Widex Evoke app, and then reusing these programs again and again. In fact, this happened in over 1,600 separate programs in the dataset we analyzed for SoundSense Learn. The largest tagged groups of programs include TV, Work and Restaurant.

One area to highlight, where users create a program and reuse their preferred settings, is the workplace (141 programs). The workplace is a setting in which users are generally satisfied, according to satisfaction ratings published in a large hearing aid consumer survey (MarkeTrak, 2015). However, even an 83% satisfaction rating for hearing aid performance in the workplace means that almost one in five hearing aid users is not satisfied with the sound in the workplace. We suggest that some of these dissatisfied users could be satisfied with SoundSense Learn, which allows them to find hearing aid settings to suit their challenges in the workplace.

Many work settings are indoor office-based with good signal-to-noise ratios (SNR), where we expect Widex Evoke to automatically provide the best sound. However, for some users, work is a highly variable setting with a more challenging SNR or a unique noise source, making it more complex for an automatic program to meet such variations. Also, some users may just want something different from the sound in their workplace, beyond the sound delivered automatically by Widex Evoke.

Whether the user is looking for more individual sound or the environment is more exotic may be unclear, but SoundSense Learn is a fast and effective answer to both challenges and enables the user to find their preferred individual settings.

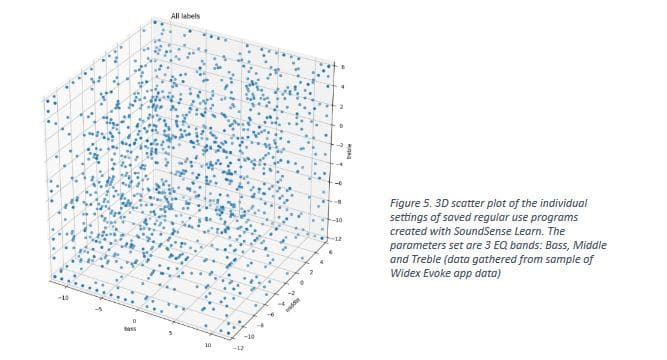

This interpretation is further reinforced when we investigate the data generated from using SoundSense Learn in the Widex Evoke app. Figure 5 shows the individual settings that make up each of the programs created and saved by users of SoundSense Learn. It is clear that the programs being created and used are incredibly diverse, spread out over combinations of all three acoustic dimensions (low, mid and high frequency).

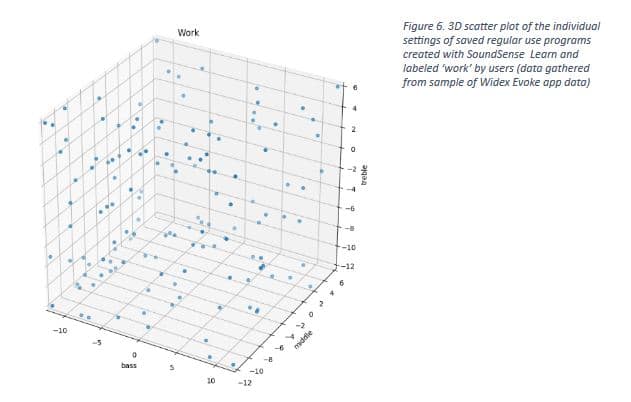

When we focus only on those programs created and labelled ‘Work’, the diversity of the pattern remains (fig. 6). This diversity of settings is not going to be met by a predicted automatic hearing aid program; it is only met when the individual listening intention is considered and used to find settings.

When we look again at MarkeTrak (2015), we can see that the largest satisfaction gaps remaining in hearing aids today are related to sound. A satisfaction gap is found when a metric is generally rated as satisfactory overall by many users but also has a small group of dissatisfied users. The areas of hearing aid sound with large satisfaction gaps include clarity of sound, naturalness of sound and fidelity/richness of sound.

Conclusion

So, while we still endeavor to make the most automatic hearing device possible, it seems that there will always be situations where the listening intention of the user is better met via powerful and effective personalization in the moment. Widex Evoke and SoundSense Learn change the way end-users interact with hearing aids, providing them with opportunities for immediate personalized improvement based on their intentions and preferences.

References:

- Aldaz, G., Puria, S., & Leifer, L. J. (2016). Smartphone-Based System for Learning and Inferring Hearing Aid Settings. Journal of the American Academy of Audiology, 27(9), 732–749.

- Daugherty, P. R. (2018). Human + Machine. Reimagining Work in the Age of AI. Boston, Massachusetts: Harvard Business Review Press.

- Hearing Industries Association. MarkeTrak 9: A New Baseline “Estimating Hearing Loss and Adoption Rates and Exploring Key Aspects of the Patient Journey” (March 2015)

- Mueller, J. P., & Massaron, L. (2018). Artificial Intelligence for Dummies. New Jersey: John Wiley & Sons Inc.

- Kuk, F. K. (2017). Going BEYOND – A testament of progressive innovation. Hearing Review supplement. (January 2017)

- Merriam-Webster (2018). Website accessed at: https://www.merriam-webster.com/dictionary/artificial%20intelligence

- Mueller, J. P. (2016). Machine Learning for Dummies. New Jersey: John Wiley & Sons Inc.

- Nielsen, J. B. (2014). Systems for Personalization of Hearing Instruments – A Machine Learning Approach. Danish Technical University (DTU).

- Townend O, Nielsen JB, Ramsgaard J. Real-life applications of machine learning in hearing aids. Hearing Review. 2018;25(4):34-37.

- Townend O, Nielsen JB, Balslev D. SoundSense Learn—Listening intention and machine learning. Hearing Review

About the Authors:

James W. Martin, Jr, AuD, is the Director of Audiological Communications at Widex USA, Hauppauge, NY. Oliver Townend is the Head of Audiology Communications at Widex A/S in Lynge, Denmark.